Machine learning for Regenerative Peripheral Nerve Interface-based prosthetic control: current applications and clinical translation

Abstract

Machine learning algorithms and control systems have changed the design of modern-day prosthetic devices. This narrative review explores the evolution and application of machine learning in advanced prosthetic devices. Despite the many advances in prosthetic technology over the years, we still have not achieved the necessary level of functional rehabilitation or a seamless interface that allows users to truly mirror natural movement. Challenges persist in creating intuitive control strategies that can both interpret complex neural signals and translate them into fluid, multi-articulated movements, and better control strategies are needed for these advanced prosthetic devices. Regenerative Peripheral Nerve Interface (RPNI) surgery has emerged as a promising way to enhance prosthetic functionality. However, significant work is still needed to bridge the gap between current capabilities and the seamless, intuitive control required for naturalistic movement and true prosthetic embodiment. For continuous control, Kalman and Wiener filters have successfully translated electromyography (EMG) signals into smooth finger movements. In a study with rhesus macaques, a Kalman filter-based system achieved closed-loop continuous hand control using RPNI signals. For pose identification, Naïve Bayes (NB) classifiers and Hidden Markov Models combined with NB (HMM-NB) have shown high accuracy. One study reported >96% accuracy in classifying finger movements using an NB classifier in rhesus macaques with RPNIs. In human participants, researchers decoded five different finger postures using only RPNI signals, both offline and in real time. Long-term stability of RPNI-based control has been demonstrated, with controllers maintaining high accuracy using calibration data collected up to 246 days prior.
In a practical application, a human participant with RPNIs successfully completed a Coffee Making Task using four distinct grip patterns, showcasing the system’s functional utility.

Keywords

INTRODUCTION

The sudden loss of an upper limb is devastating, often resulting in functional and vocational impacts on affected individuals. In the United States alone, there are 2.3 million individuals living with limb loss, with approximately 185,000 new amputations occurring each year[1]. Clinical research has shown that user acceptance of prostheses depends mainly on the type of prosthesis, user training, and the control strategy employed[2,3]. There have been rapid advancements in the development of articulated and lifelike prosthetic limbs over the past two decades, including myoelectric prosthetics, hybrid-assistive-limb systems, exoskeletons, neural-controlled prosthetics, and robotic prosthetics[4,5]. Despite all these advancements, a seamless interface that allows users to truly mirror natural movement has yet to be developed.

Regenerative Peripheral Nerve Interface (RPNI) surgery is a procedure performed to amplify efferent motor action potentials from peripheral nerves in the residual limbs of people with limb loss, thereby enhancing prosthetic control. RPNI was developed in the Neuromuscular Laboratory at the University of Michigan. This surgical procedure involves harvesting a 3 cm × 1.5 cm × 0.5 cm autologous free skeletal muscle graft and wrapping it around the terminal end of a peripheral nerve or its individual fascicles[6]. The muscle graft, which can be harvested from the amputation site or a distant location, undergoes a process of degeneration, regeneration, and reinnervation[7]. The graft relies on imbibition in the initial stages postoperatively, followed by inosculation and ultimately revascularization[8]. During regeneration, the denervated muscle graft is reinnervated by the nerve or fascicle, leading to the formation of functional neuromuscular junctions. The peripheral nerve carries relatively low-amplitude efferent motor action potentials, which transmit signals through the newly formed neuromuscular junctions to cause RPNI muscle contraction. The peripheral nerve signals are then recorded from the RPNI, rather than directly from the peripheral nerve, to create a more favorable signal-to-noise ratio (SNR).

RPNI signals are stable over the long term, which can be attributed to several biological mechanisms. First, the reinnervated muscle graft contains functional neuromuscular junctions that remain stable over time, enabling consistent generation of compound muscle action potentials (CMAPs). Second, robust revascularization of the muscle graft ensures sustained tissue viability and prevents signal degradation over time. Together, these features create a biologically stable, self-contained unit that continues to produce high-quality, volitional signals for prosthetic control[9,10]. Electrodes implanted within the RPNI then record these amplified CMAPs, enabling precise prosthetic control[11]. The RPNI effectively acts as a bioamplifier and signal transducer for peripheral nerve signals, improving SNRs and facilitating highly specific and reliable prosthetic control.
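The SNR argument above can be made concrete with the usual amplitude-ratio definition, SNR (dB) = 20·log10(signal RMS / noise RMS). The sketch below uses hypothetical amplitudes in arbitrary units, chosen only to show that, with the recording noise floor held fixed, a larger recorded signal (such as an amplified CMAP rather than a low-amplitude nerve signal) yields a higher SNR; the numbers are not measurements from RPNI studies.

```python
import numpy as np

def rms(x):
    """Root-mean-square amplitude of a signal segment."""
    return float(np.sqrt(np.mean(np.square(x))))

def snr_db(signal, noise):
    """SNR in decibels from the amplitude (RMS) ratio: 20 * log10(signal_rms / noise_rms)."""
    return 20.0 * np.log10(rms(signal) / rms(noise))

# Hypothetical amplitudes in arbitrary units, for illustration only.
pattern = np.array([1.0, -1.0, 1.0, -1.0])
noise = 0.01 * np.array([1.0, -1.0, -1.0, 1.0])  # same noise floor in both cases
small_signal = 0.02 * pattern                     # low-amplitude signal
large_signal = 1.0 * pattern                      # amplified signal

print(f"low-amplitude signal: {snr_db(small_signal, noise):.1f} dB")  # 6.0 dB
print(f"amplified signal:     {snr_db(large_signal, noise):.1f} dB")  # 40.0 dB
```

Every factor of 10 in amplitude adds 20 dB, which is why amplifying the signal at its source, rather than filtering after recording, improves SNR so effectively.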

While RPNI surgery provides an excellent method for generating control signals, the clinical challenge remains complex. For instance, the median nerve innervates muscles responsible for multiple functions, including index, middle, and ring finger flexion as well as wrist flexion[12,13]. The signals recorded from a single nerve therefore reflect multiple overlapping motor intentions that must be accurately disentangled to predict the user's intended action. Interpreting these signals well enough to control advanced multi-articulated prosthetic devices is where machine learning algorithms can play a crucial role: these computational techniques can decode the complex signals generated by RPNIs and translate them into specific commands for the prosthesis. By combining the biological advantage of RPNI with the computational power of machine learning, researchers can significantly improve the performance of modern-day prosthetics. The primary objective of this narrative review is to demonstrate how machine learning algorithms have enhanced prosthetic control, specifically in their application to RPNI surgery, ultimately improving the quality of life of individuals with upper extremity amputations. While numerous alternative control strategies exist, including targeted muscle reinnervation (TMR) and conventional myoelectric systems, this narrative review intentionally focuses on RPNIs because of their role in biologically amplifying neural signals for advanced prosthetic control.

APPLICATION OF MACHINE LEARNING IN RPNI SURGERY

Continuous control

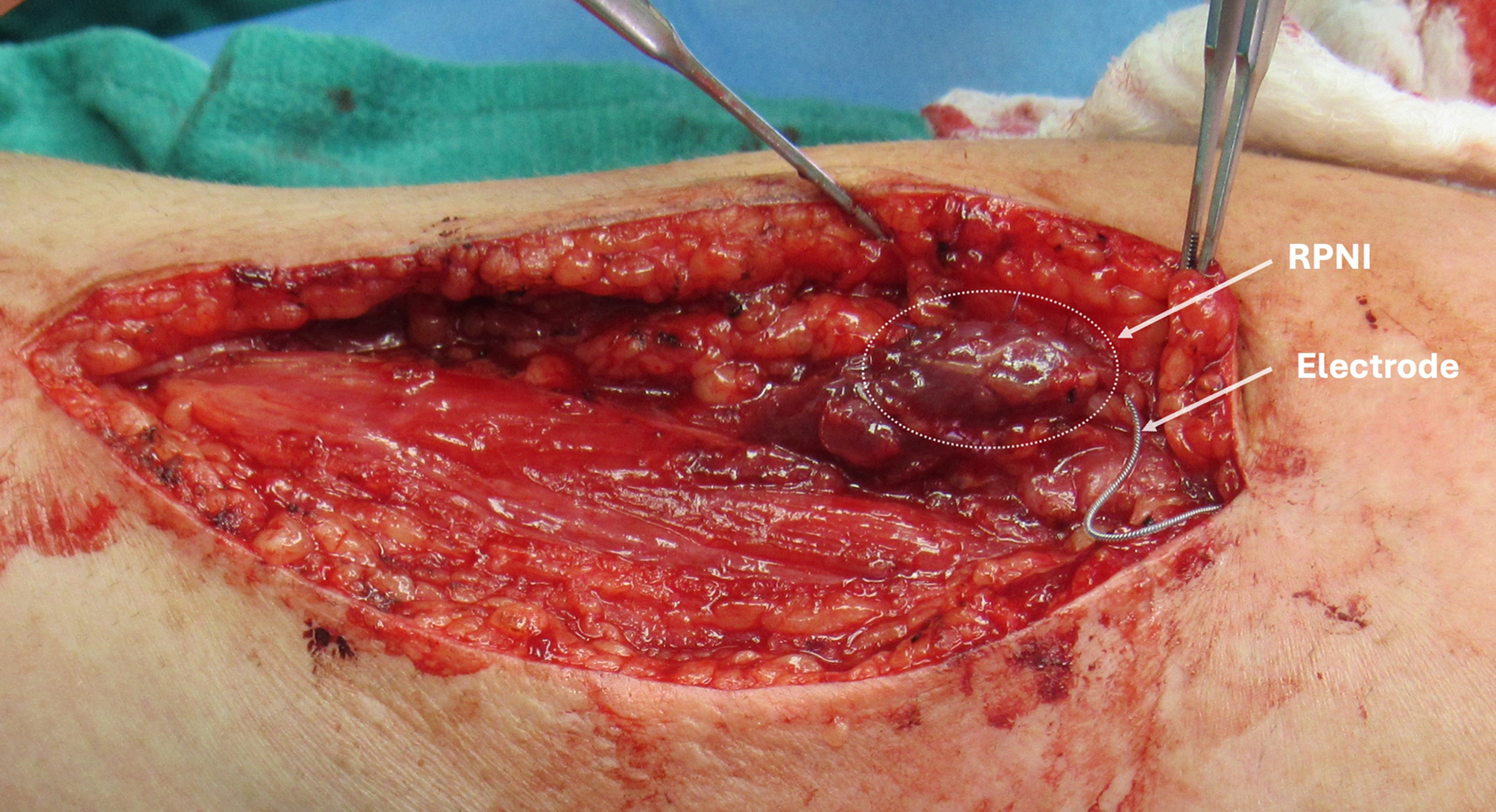

RPNIs [Figure 1] produce high-amplitude electromyography (EMG) signals with large SNRs, which makes them well suited to processing by machine learning algorithms. To decode the EMG signals from RPNIs, researchers have applied various machine learning algorithms focusing on continuous control and pose identification. For continuous control, the Kalman filter, a recursive algorithm that estimates the current state of a system (e.g., finger position) from a model of the system's dynamics and noisy measurements (e.g., EMG signals), has been used to translate EMG signals directly into finger movements[14-16]. It predicts finger positions using a model of hand movement dynamics, updates these predictions based on EMG signal measurements, and filters out noise to provide smooth, accurate estimates of intended movements. The Wiener filter, another method used for continuous reconstruction of movement, works differently[17]. It uses a statistical model of the system's input and output to estimate the system's state[18]. It can be thought of as a system that learns the patterns relating input (EMG signals) to output (finger movements) and uses this knowledge to make predictions. In prosthetic applications, it uses a model of the relationship between EMG signals and finger movements to estimate the most likely finger position from observed EMG data, minimizing the mean squared error between the estimated and actual finger positions[16]. Both the Kalman and Wiener filters enable continuous control of prosthetic devices by translating EMG signals into smooth, accurate finger movements, helping to bridge the gap between a user's neural signals and the physical actions of their prosthetic limb.
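The predict-update cycle described above can be sketched with NumPy. This is a minimal linear-Gaussian Kalman filter of the kind commonly used for neural decoding, not the implementation from the cited studies. It assumes the state is finger position and velocity, the observations are a vector of EMG features per time bin, and the model matrices are fit by least squares on calibration data; the synthetic trajectory, bin width, and feature generator are hypothetical.

```python
import numpy as np

def fit_kalman(X, Z):
    """Fit a linear-Gaussian state-space model by least squares.

    X: (T, n) kinematic states from calibration (here: finger position, velocity).
    Z: (T, m) EMG feature vectors recorded in the same time bins.
    Model: x_t = A x_{t-1} + w_t (cov W);  z_t = H x_t + q_t (cov Q).
    """
    X0, X1 = X[:-1], X[1:]
    A = np.linalg.lstsq(X0, X1, rcond=None)[0].T
    W = np.atleast_2d(np.cov(X1 - X0 @ A.T, rowvar=False))
    H = np.linalg.lstsq(X, Z, rcond=None)[0].T
    Q = np.atleast_2d(np.cov(Z - X @ H.T, rowvar=False))
    return A, W, H, Q

def kalman_decode(Z, A, W, H, Q, x0, P0):
    """Run the predict/update recursion over a sequence of feature vectors."""
    x, P = x0.astype(float), P0.astype(float)
    I = np.eye(len(x))
    estimates = []
    for z in Z:
        # Predict: propagate the previous estimate through the movement-dynamics model.
        x = A @ x
        P = A @ P @ A.T + W
        # Update: correct the prediction using the new EMG feature measurement.
        S = H @ P @ H.T + Q
        K = P @ H.T @ np.linalg.inv(S)
        x = x + K @ (z - H @ x)
        P = (I - K @ H) @ P
        estimates.append(x.copy())
    return np.array(estimates)

# Synthetic demo (hypothetical data): 50-ms bins, sinusoidal finger flexion.
rng = np.random.default_rng(0)
dt = 0.05
t = np.arange(2000) * dt
pos = np.sin(0.5 * t)
vel = np.gradient(pos, dt)
X = np.column_stack([pos, vel])
H_true = rng.normal(size=(4, 2))                 # 4 hypothetical EMG feature channels
Z = X @ H_true.T + 0.3 * rng.normal(size=(2000, 4))

A, W, H, Q = fit_kalman(X[:1000], Z[:1000])                      # calibration half
X_hat = kalman_decode(Z[1000:], A, W, H, Q, X[999], np.eye(2))  # held-out half
r = np.corrcoef(X_hat[:, 0], X[1000:, 0])[0, 1]
print(f"decoded vs. true position correlation: r = {r:.3f}")
```

Fitting the dynamics (A, W) and the observation model (H, Q) separately keeps calibration to two least-squares problems; real EMG features are non-negative and only approximately linear in kinematics, so this linear model is a simplification.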

Figure 1. An RPNI on the radial nerve following neuroma resection. A 3 cm × 2 cm × 0.5 cm autologous muscle graft was wrapped around the terminal end of the radial nerve to create the RPNI construct (white dotted circle) with an electrode attached. RPNI: Regenerative Peripheral Nerve Interface.

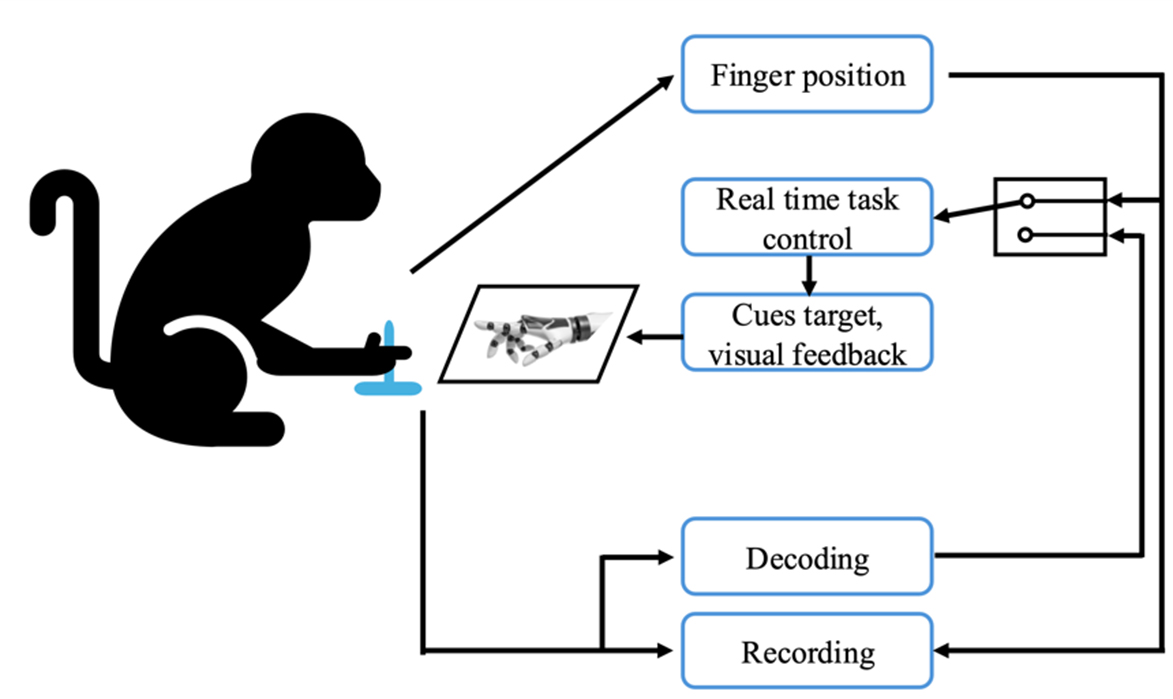

Vu et al.[9] implanted RPNIs and intramuscular bipolar electrodes (IM-MES) in two rhesus macaques to enable closed-loop continuous hand control. The non-human primates (NHPs) were trained to perform finger movement tasks, and a flex sensor attached to each NHP's index finger measured finger position [Figure 2]. The NHPs hit targets on the screen by moving their fingers, and the virtual hand could be controlled either by the flex sensor or by decoding RPNI signals in real time. EMG signals from the RPNIs were recorded and filtered. A Kalman filter decoded continuous finger position from the EMG signals using two temporal features of the EMG waveform: mean absolute value (MAV) and waveform line length (LL). For comparison, a Wiener filter was applied under identical experimental conditions, and both decoding algorithms were evaluated in offline and online closed-loop sessions. While both filters enabled real-time control, the Kalman filter demonstrated superior responsiveness, requiring a shorter history of neural data to generate accurate predictions. The Wiener filter achieved similar performance but was more computationally intensive and less suited to real-time use. These direct comparisons suggest that Kalman filtering may be better suited to low-latency continuous control in RPNI-based applications.
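The two features named above, and a Wiener-style decoder built on them, can be sketched as follows. MAV is the mean of the rectified signal in a window, and LL is the summed absolute sample-to-sample difference. The Wiener filter is implemented here in a common discrete-time form: a least-squares linear map from the current and several previous feature bins to finger position, which is why it needs a history of neural data. The sampling rate, 50-ms bin width, five-bin history, and synthetic two-channel EMG are assumptions for illustration, not parameters from Vu et al.

```python
import numpy as np

def emg_features(emg, win):
    """Per-channel MAV and waveform line length (LL) over non-overlapping windows.

    emg: (samples, channels) raw EMG; win: samples per window.
    Returns (n_windows, 2 * channels): [MAV of each channel, LL of each channel].
    """
    n = emg.shape[0] // win
    seg = emg[: n * win].reshape(n, win, emg.shape[1])
    mav = np.mean(np.abs(seg), axis=1)                 # mean absolute value
    ll = np.sum(np.abs(np.diff(seg, axis=1)), axis=1)  # summed sample-to-sample change
    return np.hstack([mav, ll])

def lagged(F, lags):
    """Design matrix of the current and (lags - 1) previous feature bins plus a bias column."""
    T = F.shape[0]
    blocks = [F[lags - 1 - k : T - k] for k in range(lags)]
    return np.hstack(blocks + [np.ones((T - lags + 1, 1))])

# Synthetic demo (all parameters hypothetical).
rng = np.random.default_rng(1)
fs, win, lags = 1000, 50, 5                    # 1 kHz sampling, 50-ms bins, 5-bin history
t = np.arange(60 * fs) / fs                    # 60 s
pos = 0.5 * (1 + np.sin(2 * np.pi * 0.2 * t))  # finger flexion in [0, 1]
amp = np.column_stack([0.1 + pos, 1.1 - pos])  # flexor-like and extensor-like channels
emg = amp * rng.standard_normal(amp.shape)

F = emg_features(emg, win)                                 # (1200, 4)
y = pos[: F.shape[0] * win].reshape(-1, win).mean(axis=1)  # bin-averaged position
L = lagged(F, lags)
y_lag = y[lags - 1 :]                                      # align targets with design rows
half = L.shape[0] // 2

# Wiener-style fit: least-squares weights over the feature history.
weights, *_ = np.linalg.lstsq(L[:half], y_lag[:half], rcond=None)
y_hat = L[half:] @ weights
r = np.corrcoef(y_hat, y_lag[half:])[0, 1]
print(f"held-out correlation: r = {r:.3f}")
```

Unlike the Kalman sketch, this decoder has no explicit dynamics model; temporal smoothing comes entirely from the lagged feature window, so longer histories trade responsiveness for smoothness.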

Figure 2. The NHP controlled a virtual hand model displayed on a monitor using a flex sensor attached to the index finger, with the goal of hitting and holding a spherical target to receive a reward, while also demonstrating the capability to control the virtual hand through real-time decoding of RPNI signals. NHP: Non-human primate; RPNI: Regenerative Peripheral Nerve Interface.

Pose identification

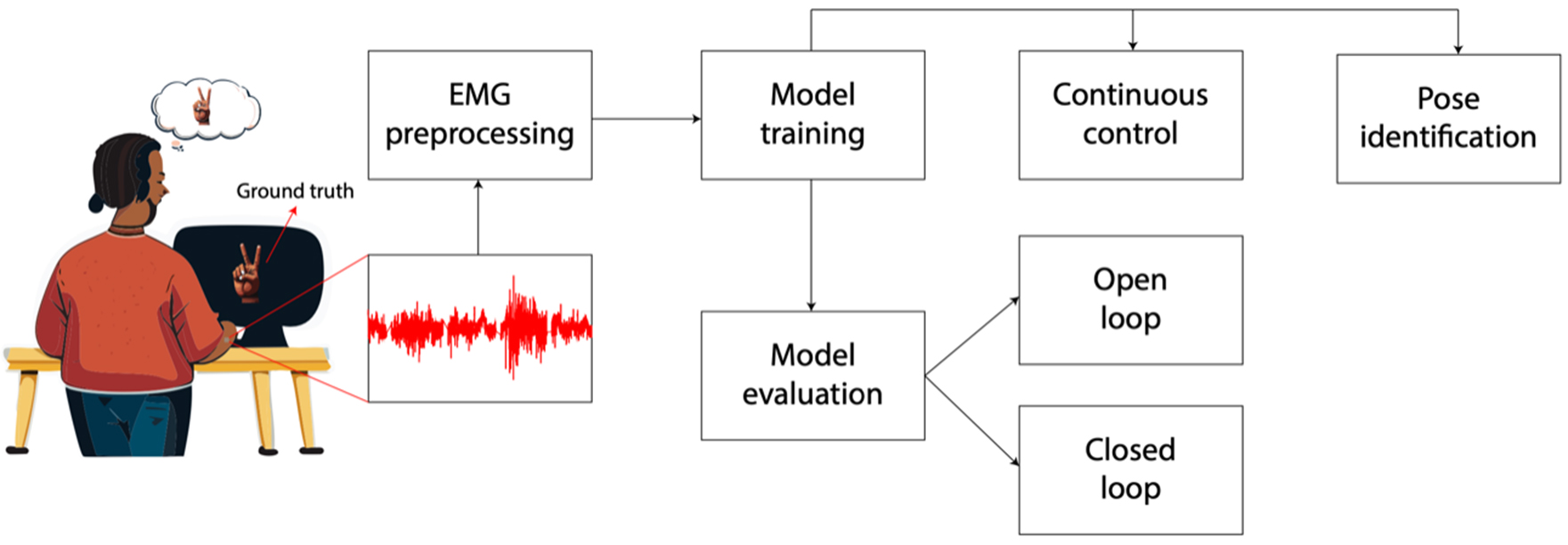

Pose identification, also known as pose estimation, is a computer vision technique that detects and tracks the position and orientation of a person's body parts in images or video [Figure 3][19,20]. It works by identifying key body joints, reconstructing a human skeleton from their relative positions, and recognizing different postures[21]. In the RPNI studies discussed here, the analogous task is classifying the user's intended hand posture from EMG signals rather than from images. For pose identification, algorithms such as the Naïve Bayes (NB) classifier and Hidden Markov Models (HMM) combined with Naïve Bayes (HMM-NB) have proven effective. The NB classifier is a supervised machine learning algorithm used for classification tasks[22]. It is based on Bayes' theorem, which computes the posterior probability of each class from its prior probability and the likelihood of the observed features. The "naïve" aspect comes from its assumption that all features are conditionally independent given the class, which simplifies computation but may not always reflect reality[23]. In the context of pose identification, an NB classifier learns during training the probability of different body joint positions occurring in various poses[24]. When presented with a new image, it calculates the probability of each possible pose based on the observed joint positions and selects the pose with the highest probability as the classification result. This approach is particularly useful for high-dimensional data and can perform well even with relatively small training datasets[25]. HMMs are statistical models that work well for sequential data, making them suitable for analyzing pose sequences in video[26]. They are also well suited to modeling time-series data such as EMG signals, as they can capture the temporal dependencies between consecutive data points[27]. When combined with NB, they create a powerful tool for pose identification that can account for both spatial and temporal aspects of human movement[28].
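A Gaussian NB classifier applied to EMG feature vectors (rather than joint positions) can be sketched in a few lines of NumPy. Each class stores one mean and one variance per feature, and the per-feature log densities are summed, which is exactly the conditional-independence assumption described above. The three posture labels and their feature means are hypothetical; in practice a library implementation such as scikit-learn's `GaussianNB` would typically be used instead of this minimal class.

```python
import numpy as np

class SimpleGaussianNB:
    """Minimal Gaussian naive Bayes: one mean and variance per feature per class."""

    def fit(self, X, y):
        self.classes_ = np.unique(y)
        self.mu_ = np.array([X[y == c].mean(axis=0) for c in self.classes_])
        # Small variance floor avoids division by zero for constant features.
        self.var_ = np.array([X[y == c].var(axis=0) for c in self.classes_]) + 1e-6
        self.log_prior_ = np.log([np.mean(y == c) for c in self.classes_])
        return self

    def predict(self, X):
        X = np.atleast_2d(X)
        diff = X[:, None, :] - self.mu_[None, :, :]  # (samples, classes, features)
        # Summing per-feature log densities encodes the conditional-independence assumption.
        log_lik = -0.5 * np.sum(np.log(2 * np.pi * self.var_) + diff**2 / self.var_, axis=2)
        log_post = log_lik + self.log_prior_         # Bayes' theorem, up to a constant
        return self.classes_[np.argmax(log_post, axis=1)]

# Synthetic demo: hypothetical 4-feature EMG patterns for three postures.
rng = np.random.default_rng(2)
postures = np.array(["flexion", "extension", "rest"])
means = np.array([[3.0, 0.5, 2.5, 0.5],
                  [0.5, 3.0, 0.5, 2.5],
                  [0.5, 0.5, 0.5, 0.5]])
labels = np.repeat(np.arange(3), 200)
X = means[labels] + 0.5 * rng.standard_normal((600, 4))
y = postures[labels]

order = rng.permutation(600)
train, test = order[:400], order[400:]
clf = SimpleGaussianNB().fit(X[train], y[train])
accuracy = np.mean(clf.predict(X[test]) == y[test])
print(f"held-out accuracy: {accuracy:.2f}")
```

Training reduces to computing class means, variances, and frequencies, which is why NB can be calibrated quickly from a small amount of labeled data.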
In the HMM-NB approach, each pose is treated as a hidden state in the HMM, while the observed body joint positions serve as the visible outputs[29]. The HMM learns the probability of transitioning from one pose to another over time, while the NB component calculates the probability of observing certain joint positions given a particular pose. This combination allows the model to consider both the current observation and the sequence of previous poses when making predictions[30]. The HMM component captures the temporal dependencies between consecutive poses, while the NB component handles the relationship between poses and observed joint positions[31]. This approach is particularly effective for pose identification in video sequences, as it can smooth predictions over time and handle noise in individual frame observations more robustly than methods that consider each frame in isolation.
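The combination described above can be sketched as online (causal) forward filtering: the HMM transition matrix carries the previous belief forward in time, and the NB likelihood of the current feature vector updates it. This is a minimal illustration under stated assumptions, namely Gaussian NB emissions with already-estimated means and variances and a hypothetical "sticky" transition matrix that favors staying in the current pose; it is not the specific model used in the cited studies.

```python
import numpy as np

def nb_log_likelihood(x, mu, var):
    """NB emission term for one feature vector x (d,): summed Gaussian log densities per pose.
    mu, var: (n_poses, d) pose-specific means and variances. Returns (n_poses,)."""
    return -0.5 * np.sum(np.log(2 * np.pi * var) + (x - mu) ** 2 / var, axis=1)

def hmm_nb_filter(X, mu, var, trans, prior):
    """Forward filtering: most probable pose at each step given observations so far."""
    log_belief = np.log(prior)
    preds = []
    for i, x in enumerate(X):
        if i > 0:
            # Predict: carry the previous belief through the pose-transition matrix.
            m = log_belief.max()
            log_belief = np.log(np.exp(log_belief - m) @ trans) + m
        # Update: weight by the NB likelihood of the current observation, then normalize.
        log_belief = log_belief + nb_log_likelihood(x, mu, var)
        log_belief = log_belief - np.logaddexp.reduce(log_belief)
        preds.append(int(np.argmax(log_belief)))
    return np.array(preds)

# Synthetic demo (hypothetical): noisy features from poses held for 50 bins each.
rng = np.random.default_rng(3)
mu = np.array([[3.0, 0.5, 2.5, 0.5],
               [0.5, 3.0, 0.5, 2.5],
               [0.5, 0.5, 0.5, 0.5]])
var = np.full_like(mu, 1.2 ** 2)
states = np.repeat(rng.integers(0, 3, size=20), 50)
X = mu[states] + 1.2 * rng.standard_normal((states.size, 4))

trans = np.full((3, 3), 0.01)
np.fill_diagonal(trans, 0.98)  # poses tend to persist from bin to bin
prior = np.full(3, 1 / 3)

nb_only = np.array([np.argmax(nb_log_likelihood(x, mu, var)) for x in X])
hmm_nb = hmm_nb_filter(X, mu, var, trans, prior)
acc_nb = np.mean(nb_only == states)
acc_hmm = np.mean(hmm_nb == states)
print(f"frame-by-frame NB accuracy: {acc_nb:.2f}")
print(f"HMM-NB accuracy:            {acc_hmm:.2f}")
```

Because the filter uses only past and current observations, it can run in real time; the cost is a short lag at genuine pose transitions, while isolated noisy bins are largely suppressed.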

Figure 3. Illustration of the process of using RPNIs and machine learning algorithms to control a prosthetic device. The subject performs a hand gesture, and the ground truth refers to the actual gesture that the subject is attempting to mirror; this serves as labeled data for training the machine learning algorithm. The subject's muscle activity is recorded as EMG signals (red waveform). Preprocessed EMG signals, paired with their corresponding ground truth gestures, are used to train a machine learning model, whose performance is then evaluated to ensure it can accurately predict gestures from EMG inputs. Once trained and evaluated, the model is used for real-time control of a device (e.g., a robotic hand or prosthesis) in open-loop control (the system predicts gestures but does not adjust based on feedback from the environment or the subject) or closed-loop control (the system incorporates feedback, such as visual or tactile input, to refine its predictions and improve control accuracy). The model identifies discrete gestures or poses (e.g., peace sign, fist, open hand) from the EMG signals, and this information can be used to control specific actions of a prosthetic device. RPNIs: Regenerative Peripheral Nerve Interfaces; EMG: electromyography.

Irwin et al.[10] used an NB classifier to classify finger movements as flexion, extension, or rest with greater than 96% accuracy in two rhesus macaques implanted with RPNIs. The NHPs were implanted with RPNIs on branches of their median and radial nerves, which control finger movements. The RPNIs produced EMG signals similar to those of intact muscles, and single motor units (a single motor neuron and the muscle fibers it innervates) could be discriminated from all RPNIs. The NHPs were also able to control a virtual hand using their RPNI signals in both open-loop settings (the virtual hand mirrored the classifier output without providing the NHP visual feedback during the trial) and closed-loop settings (the virtual hand was controlled by the online classifier output, and the NHPs received on-screen feedback of the movement predicted from their RPNI signals), achieving performance similar to physical control (the virtual hand controlled by the NHP's actual movements). These results suggest that RPNIs can support more intuitive and precise prosthetic control, stable signals over time, and detailed extraction of motor intentions, enhancing both functionality and user experience.

These classifiers can quickly and accurately decode a range of hand movements, including individual finger motions, wrist actions, and various grasp patterns. Vaskov et al.[32] found that implanted electrodes recorded high-quality EMG signals from RPNIs and residual innervated forearm muscles in two persons with transradial amputations. The implant procedure targeted the same individual finger movements in both participants. Using these signals, the participants were able to control a virtual hand to distinguish individual finger, intrinsic, and grasp postures. Real-time control was tested with a posture switching task, in which participants controlled a virtual hand and attempted to match the posture of a cue hand. The speed and accuracy of the pattern recognition system in distinguishing finger movements exceeded those reported in earlier work[33-35] that quantified real-time performance in virtual environments. In a controlled environment, the HMM-NB also distinguished a smaller set of functional postures in novel static arm positions. The participants used the high-speed pattern recognition system to control advanced robotic prostheses, eliminating the need for time-consuming grip triggers or selection schemes. The study also found that the HMM-NB consistently improved simulated performance over NB and outperformed each alternate classifier. The authors attributed this to the HMM-NB's ability to model transitions between latent states, which allowed it to issue accurate predictions rapidly; this capacity to capture temporal dynamics offered a distinct advantage in real-time applications despite the model's higher computational load. The improvement was most noticeable for smaller processing windows, which can increase responsiveness. For Participant 1 (P1), the simulated accuracy of HMM-NB was consistently higher than that of NB across processing window lengths; at a 50-ms processing window, for example, HMM-NB achieved approximately 95% accuracy versus 90% for NB, an increase of approximately 5 percentage points. Similarly, for Participant 2 (P2), HMM-NB reached 94% simulated accuracy, compared with 85% for NB. Overall, the improvement of HMM-NB over NB was statistically significant (P < 0.01, paired t-test, n = 42 window lengths across 6 datasets).
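Absolute (percentage-point) and relative improvements are easy to conflate. The short calculation below uses only the approximate accuracies quoted above and shows that the gain from 90% to 95% halves the error rate, while the gain from 85% to 94% reduces it by 60%. The helper name is illustrative.

```python
def compare_accuracy(acc_new, acc_old):
    """Return (absolute gain in percentage points, relative reduction in error rate).
    Accuracies are given in percent."""
    gain_pp = acc_new - acc_old
    err_old, err_new = 100 - acc_old, 100 - acc_new
    rel_error_reduction = (err_old - err_new) / err_old
    return gain_pp, rel_error_reduction

for label, hmm_nb, nb in [("P1, 50-ms window", 95, 90), ("P2", 94, 85)]:
    gain, rel = compare_accuracy(hmm_nb, nb)
    print(f"{label}: +{gain} percentage points; error rate reduced by {rel:.0%}")
# P1, 50-ms window: +5 percentage points; error rate reduced by 50%
# P2: +9 percentage points; error rate reduced by 60%
```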

RPNI-based pose identification allows patients to perform complex tasks essential for daily living. Lee et al.[36] used an HMM classifier in a human participant with a unilateral transradial amputation and surgically implanted RPNIs to distinguish four functional grips (rest, fist, pinch, and point) with high accuracy. RPNI controllers maintained high accuracy using calibration data collected up to 246 days prior, showing the long-term stability of this approach. In a practical demonstration of prosthetic functionality, the participant completed a Coffee Making Task that involved manipulating various objects associated with brewing coffee, including a cup filled with beads to simulate water, a coffee pod, sugar, and a compact Keurig™ coffee maker. The task required four distinct grip patterns to handle these items. To assess performance, the participant executed the entire sequence without interruption, allowing measurement of total completion time. The task was also broken down into five discrete segments, each focusing on transitioning to a specific grip pattern (e.g., forming a fist to grasp the cup), to evaluate the accuracy of individual grip transitions. This task demonstrated the system's utility in real-world scenarios.

To evaluate the long-term stability of RPNIs, Vu et al.[37] used an NB classifier to decode hand postures in real time and offline. A cue hand instructed participants to perform a specific posture, which they volitionally mirrored with their phantom limb while attempting to match it using a separate virtual hand. EMG data from only the RPNIs were used to train the NB classifier. Classifier accuracy was quantified from the number of correct predictions, and classifier speed was measured as the time between EMG onset and the first correct predicted output. The researchers decoded five different finger postures in each participant, both offline and in real time, using RPNI signals alone. When the classifier was trained on both RPNI and residual muscle signals, four different grasping postures could be decoded. Participants successfully controlled a hand prosthesis in real time for up to 300 days without recalibrating the control algorithm, showing the potential of RPNIs for clinical translation. These findings highlight the long-term signal stability of RPNIs, which has been attributed to stable neuromuscular junctions (NMJs) and robust vascularization over time. These features collectively support durable signal amplitude and decoding accuracy over extended periods, reinforcing the role of RPNIs as a reliable interface for neural prosthetic control.
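The two metrics described above can be sketched for a single trial. Because the exact definitions and onset-detection rule used by Vu et al. are not given here, this sketch assumes a hypothetical onset rule (the first bin where EMG MAV exceeds the baseline mean plus three standard deviations), defines accuracy as the fraction of post-onset predictions that match the cued posture, and uses a made-up 50-ms bin width and prediction stream.

```python
import numpy as np

def detect_onset(mav, baseline_bins=10, k=3.0):
    """Hypothetical onset rule: first bin whose MAV exceeds baseline mean + k * SD."""
    baseline = mav[:baseline_bins]
    threshold = baseline.mean() + k * baseline.std()
    above = np.flatnonzero(mav > threshold)
    return int(above[0]) if above.size else None

def trial_metrics(pred, target, onset, bin_ms):
    """Accuracy: fraction of post-onset predictions matching the cued posture.
    Latency: time (ms) from EMG onset to the first correct prediction, or None."""
    correct = np.asarray(pred[onset:]) == target
    accuracy = float(correct.mean())
    hits = np.flatnonzero(correct)
    latency_ms = float(hits[0] * bin_ms) if hits.size else None
    return accuracy, latency_ms

# Made-up single trial: 50-ms bins, cued posture 1, rest = 0.
mav = np.array([1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 1.0, 1.1, 0.9,
                1.0, 4.0, 5.0, 5.5, 5.0, 5.2])
pred = np.array([0] * 11 + [0, 2, 1, 1, 1])
onset = detect_onset(mav)
accuracy, latency_ms = trial_metrics(pred, target=1, onset=onset, bin_ms=50)
print(onset, accuracy, latency_ms)  # 11 0.6 100.0
```

Reporting both numbers matters because a classifier can be accurate but slow to settle, or fast but prone to spurious switches; a real pipeline would also need to handle trials in which no onset is detected.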

Clinical integration

By combining novel surgical techniques with machine learning algorithms, researchers have achieved more intuitive, precise, and stable prosthetic control. Patients can control prosthetic devices more naturally by attempting the desired movement with their phantom limb, which may reduce the cognitive load of prosthetic use in everyday life. The ability to distinguish multiple hand postures enables the use of advanced multi-articulated prosthetic hands, allowing patients to perform a wider range of movements, including individual finger control. The same decoding systems can be used in virtual environments for training and rehabilitation, allowing patients to practice prosthetic control in a safe, controlled setting before using the device in daily life. The ability to decode multiple finger postures and grasping patterns also enables personalized prosthetic programming tailored to each patient's specific needs and lifestyle. The precise control offered by RPNI-based pose identification can be integrated into occupational therapy programs, helping patients regain independence in work-related tasks and potentially facilitating return to employment. To address individual patient variability and signal drift in clinical applications, adaptive algorithms have been proposed. For example, Kalman filters can be tuned to individual patients by incorporating user-specific calibration data during initial training, followed by periodic recalibration sessions to update model parameters[9,38,39]. Alternatively, adaptive Kalman filters can adjust their parameters to changing signal statistics in real time, and extended Kalman filters can accommodate nonlinear relationships between signals and movement[40,41]. Machine learning models such as HMM-NB can be trained with small amounts of user-specific data and improved incrementally with user feedback[42].
These approaches aim to reduce the burden of frequent recalibration and improve the long-term usability of prosthetic systems in daily life. As research in this field progresses, the integration of machine learning with RPNI technology provides a way to bridge the gap between biological intent and prosthetic action, potentially revolutionizing the field of neuroprosthetics. For clarity, we summarized important aspects of each machine learning algorithm in an overview table [Table 1].
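Incremental improvement with new user data can be sketched for the NB component: per-class counts, means, and sums of squared deviations are merged with each new labeled batch using the standard pairwise mean/variance update, so a short recalibration session can be folded in without retraining on, or storing, all earlier data. This is an illustrative sketch with hypothetical data, not the method of the cited work; a forgetting factor that down-weights older data could be added to track drift but is not shown.

```python
import numpy as np

class IncrementalGaussianNB:
    """Gaussian NB whose per-class statistics are updated one labeled batch at a time."""

    def __init__(self, n_classes, n_features):
        self.n = np.zeros(n_classes)                   # samples seen per class
        self.mu = np.zeros((n_classes, n_features))    # running means
        self.m2 = np.zeros((n_classes, n_features))    # sums of squared deviations

    def partial_fit(self, X, y):
        for c in np.unique(y):
            Xc = X[y == c]
            n_b, mu_b = len(Xc), Xc.mean(axis=0)
            m2_b = ((Xc - mu_b) ** 2).sum(axis=0)
            n_a, delta = self.n[c], mu_b - self.mu[c]
            total = n_a + n_b
            # Pairwise merge of (count, mean, M2) for the stored and new data.
            self.mu[c] += delta * n_b / total
            self.m2[c] += m2_b + delta**2 * n_a * n_b / total
            self.n[c] = total
        return self

    def variances(self):
        return self.m2 / np.maximum(self.n, 1)[:, None] + 1e-6  # small floor for stability

    def predict(self, X):
        var = self.variances()
        log_prior = np.log(np.maximum(self.n, 1) / self.n.sum())
        diff = X[:, None, :] - self.mu[None, :, :]
        log_lik = -0.5 * np.sum(np.log(2 * np.pi * var) + diff**2 / var, axis=2)
        return np.argmax(log_lik + log_prior, axis=1)

# Hypothetical data: initial calibration, then a small recalibration batch with shifted features.
rng = np.random.default_rng(4)
X_initial, y_initial = rng.normal(size=(100, 3)), rng.integers(0, 2, size=100)
X_recal, y_recal = rng.normal(loc=0.5, size=(30, 3)), rng.integers(0, 2, size=30)
model = IncrementalGaussianNB(n_classes=2, n_features=3)
model.partial_fit(X_initial, y_initial).partial_fit(X_recal, y_recal)

# Check: the merged statistics equal those computed on all data at once.
X_all = np.vstack([X_initial, X_recal])
y_all = np.concatenate([y_initial, y_recal])
print(np.allclose(model.mu[0], X_all[y_all == 0].mean(axis=0)))  # True
```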

Table 1. List of machine learning algorithms

| Algorithm | Application | Strength | Limitation | Challenges in clinical integration |
| --- | --- | --- | --- | --- |
| Kalman filter | Continuous control | Smooth, accurate finger movement predictions; real-time control | Requires a detailed model of system dynamics; may struggle with nonlinear or rapidly changing dynamics | Requires precise modeling of individual dynamics; may lose accuracy over time due to signal instability |
| Wiener filter | Continuous control | Effective for continuous movement reconstruction; learns statistically from input-output pairs | Requires a longer history of neural data | Needs frequent recalibration to handle signal drift |
| Naïve Bayes (NB) | Pose identification | High accuracy with small datasets; simple and computationally efficient | Assumes conditional independence, which may not hold in complex systems | Limited by oversimplified assumptions; struggles with noisy or overlapping signal patterns |
| Hidden Markov Model (HMM) | Pose identification | Captures temporal dependencies in sequential data | Computationally intensive; requires substantial training data for accurate modeling | High computational demands may limit real-time use; sensitive to variations in signal quality |
| HMM-NB | Pose identification | Combines spatial and temporal analysis for robust predictions | Complexity increases with more hidden states and larger datasets | Complex to integrate with prosthetics; requires large datasets to train robust models |

CONCLUSION

The use of machine learning has had a significant, positive impact on the field of upper limb rehabilitation. New possibilities now exist for enhancing prosthetic control and functionality by decoding complex EMG signals and translating them into natural prosthetic movements for more intuitive control of prosthetic devices. This has not only allowed for multi-articulated movements but also improved user experiences. Despite these advancements, several challenges remain, including the need for more robust algorithms, improved long-term stability of neural interfaces, and enhanced sensory feedback mechanisms. Future research should focus on developing more sophisticated machine learning algorithms that can adapt to individual user patterns over time and can improve the integration of sensory feedback in prosthetic systems. Although the current literature is largely composed of early-stage studies with small sample sizes, future investigations should aim to improve generalizability by including larger, more diverse patient cohorts. Stratified analyses or multicenter studies could help clarify how patient characteristics such as age, gender, and amputation type affect the effectiveness and reliability of RPNI-based control systems. Beyond current applications of traditional classifiers and filters, future directions in this field will likely be driven by advanced artificial intelligence approaches such as deep learning and reinforcement learning. Deep learning, particularly convolutional and recurrent neural networks, offers the capacity to extract complex, high-dimensional features from EMG and RPNI signals that may be inaccessible to conventional algorithms. Reinforcement learning, in contrast, provides a framework for adaptive, closed-loop training in which prosthetic systems continuously refine their performance through interaction with the user and environment.
Together, these approaches could enable prosthetic devices that not only decode user intent with higher fidelity but also adapt dynamically to changes in signal quality, user behavior, and task context. Incorporating these techniques into future clinical trials will be critical for achieving more robust, intuitive, and scalable prosthetic control systems. Another critical barrier to translation is the current lack of standardized outcome measures for evaluating prosthetic control strategies. Across published studies, metrics range from offline decoding accuracy to task-specific functional tests (e.g., Box and Block Test, Clothespin Relocation, or custom tasks such as the Coffee Making Task). While these provide valuable insights, their heterogeneity makes it difficult to compare results across studies or to aggregate data for meta-analyses. Establishing a consensus on clinically meaningful endpoints, such as long-term functional independence, user satisfaction, and quality-of-life metrics, may be essential for regulatory approval, reimbursement, and widespread adoption. The development of standardized benchmarks and shared datasets, as has been done in other fields of medical AI, could accelerate translation by enabling direct comparison of algorithmic performance and prosthetic integration across diverse patient populations.

LIMITATIONS

While these studies show the promising potential of RPNIs combined with machine learning algorithms, many remain proof-of-concept with limited sample sizes. As such, further validation in larger, more diverse populations is essential for establishing clinical generalizability. Future work should focus on larger, multicenter trials with more heterogeneous cohorts to enhance reproducibility and ensure the applicability of these systems across a wider range of individuals with limb loss. Additionally, the long-term stability of RPNI signals, while impressive, may not account for variations in signal patterns across different patient populations or over even longer periods. This could lead to algorithms that perform well for some users but less optimally for others. To mitigate these potential biases, future research should focus on expanding the diversity of participants in RPNI studies, considering factors such as age, gender, and amputation type. Furthermore, developing adaptive algorithms that can continuously learn and adjust to individual user patterns over extended periods could help address potential biases and improve the generalizability of RPNI-based prosthetic control systems.

DECLARATIONS

Acknowledgments

The authors would like to thank Alex Vaskov, PhD, for providing surgical images, flow diagrams, and expertise in mechanical engineering and machine learning algorithms.

Authors’ contributions

Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Project administration, Resources, Supervision, Validation, Writing - original draft, Writing - review & editing: Wang MJ

Validation, Writing - original draft, Writing - review & editing: Cubillos LH

Supervision, Validation, Writing - original draft, Writing - review & editing: Kung TA, Kemp SWP, Snyder-Warwick AK

Corresponding Author, Conceptualization, Supervision, Validation, Writing - original draft, Writing - review & editing: Cederna PS

Availability of data and materials

Not applicable.

Financial support and sponsorship

None.

Conflicts of interest

Cederna PS serves as President of Blue Arbor Technologies, Inc., a company that designs, manufactures, and produces neural prosthetic control systems. None of the Blue Arbor Technologies products is discussed in this article. No funding was received from any source for this article.

Kung TA serves as Chief Medical Officer of Blue Arbor Technologies, Inc., a company that designs, manufactures, and produces neural prosthetic control systems. None of the Blue Arbor Technologies products is discussed in this article. No funding was received from any source for this article.

Snyder-Warwick AK has no disclosures relevant to this work. She has received research funding from Checkpoint Surgical.

Kemp SWP, Wang MJ and Cubillos LH have no disclosures.

Ethical approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Copyright

© The Author(s) 2025.

REFERENCES

1. Amputee Coalition. 5.6 million++ Americans are living with limb loss and limb difference: new study published. Amputee Coalition (Washington, DC), February 14, 2024. Available from https://amputee-coalition.org/5-6-million-americans-living-with-limb-loss-limb-difference/ [accessed 10 October 2025].

2. Biddiss E, Chau T. The roles of predisposing characteristics, established need, and enabling resources on upper extremity prosthesis use and abandonment. Disabil Rehabil Assist Technol. 2007;2:71-84.

3. Bosman CE, van der Sluis CK, Geertzen JHB, Kerver N, Vrieling AH. User-relevant factors influencing the prosthesis use of persons with a transfemoral amputation or knee-disarticulation: a meta-synthesis of qualitative literature and focus group results. PLoS One. 2023;18:e0276874.

4. Van Der Riet D, Stopforth R, Bright G, et al. An overview and comparison of upper limb prosthetics. In: 2013 Africon; 2013 Sep 9-12; Pointe aux Piments, Mauritius. New York: IEEE; 2014. pp. 1-8.

5. Toro-Ossaba A, Tejada JC, Sanin-Villa D. Myoelectric control in rehabilitative and assistive soft exoskeletons: a comprehensive review of trends, challenges, and integration with soft robotic devices. Biomimetics. 2025;10:214.

6. Santosa KB, Oliver JD, Cederna PS, et al. Regenerative Peripheral Nerve Interfaces for prevention and management of neuromas. Clin Plast Surg. 2020;47:311-21.

7. Dumont NA, Bentzinger CF, Sincennes MC, et al. Satellite cells and skeletal muscle regeneration. Compr Physiol. 2015;5:1027-59.

8. Chávez OHG, Castillo AG, Mariscal SC, et al. Quick review and technical approach for Regenerative Peripheral Nerve Interface surgery. MPS. 2023;13:126-31.

9. Vu PP, Irwin ZT, Bullard AJ, et al. Closed-loop continuous hand control via chronic recording of Regenerative Peripheral Nerve Interfaces. IEEE Trans Neural Syst Rehabil Eng. 2018;26:515-26.

10. Irwin ZT, Schroeder KE, Vu PP, et al. Chronic recording of hand prosthesis control signals via a Regenerative Peripheral Nerve Interface in a rhesus macaque. J Neural Eng. 2016;13:046007.

11. Kung TA, Langhals NB, Martin DC, Johnson PJ, Cederna PS, Urbanchek MG. Regenerative Peripheral Nerve Interface viability and signal transduction with an implanted electrode. Plast Reconstr Surg. 2014;133:1380-94.

12. Planitzer U, Steinke H, Meixensberger J, Bechmann I, Hammer N, Winkler D. Median nerve fascicular anatomy as a basis for distal neural prostheses. Ann Anat. 2014;196:144-9.

13. Murphy KA, Morrisonponce D. Anatomy, Shoulder and Upper Limb, Median Nerve. Nih.gov. Published January 12, 2020. Available from: https://www.ncbi.nlm.nih.gov/books/NBK448084/. [Last accessed on 12 Sep 2025].

14. Kim SP, Simeral JD, Hochberg LR, Donoghue JP, Black MJ. Neural control of computer cursor velocity by decoding motor cortical spiking activity in humans with tetraplegia. J Neural Eng. 2008;5:455-76.

15. Wu W, Gao Y, Bienenstock E, Donoghue JP, Black MJ. Bayesian population decoding of motor cortical activity using a Kalman filter. Neural Comput. 2006;18:80-118.

16. Kalman RE. A new approach to linear filtering and prediction problems. J Basic Eng. 1960;82:35-45.

17. Hardie R. A fast image super-resolution algorithm using an adaptive Wiener filter. IEEE Trans Image Process. 2007;16:2953-64.

18. Jarrah YA, Grace AM, Samuel OW, et al. Enhancement of upper limb movement classification based on Wiener filtering technique. In: 2020 IEEE International Conference on E-health Networking, Application & Services (HEALTHCOM); 2021 Mar 1-2; Shenzhen, China. New York: IEEE; 2021. pp. 1-6.

19. Avogaro A, Cunico F, Rosenhahn B, Setti F. Markerless human pose estimation for biomedical applications: a survey. Front Comput Sci. 2023;5:1153160.

20. Toshev A, Szegedy C. DeepPose: human pose estimation via deep neural networks. In: 2014 IEEE Conference on Computer Vision and Pattern Recognition; 2014 Jun 23-28; Columbus, OH, USA. New York: IEEE; 2014. pp. 1653-60.

21. Desmarais Y, Mottet D, Slangen P, Montesinos P. A review of 3D human pose estimation algorithms for markerless motion capture. Comput Vis Image Und. 2021;212:103275.

22. John GH, Langley P. Estimating continuous distributions in Bayesian classifiers. arXiv. 2013;arXiv:1302.4964. Available from: [Last accessed on 12 Sep 2025].

23. Navone HD, Cook D, Downs T, Chen D. Boosting Naive-Bayes classifiers to predict outcomes for hip prostheses. In: IJCNN’99. International Joint Conference on Neural Networks. Proceedings (Cat. No.99CH36339); 1999 Jul 10-16; Washington, DC, USA. New York: IEEE; 1999. pp. 3622-6.

24. Yang X, Tian YL. EigenJoints-based action recognition using Naïve-Bayes-Nearest-Neighbor. In: 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops; 2012 Jun 16-21; Providence, RI, USA. New York: IEEE; 2012. pp. 14-9.

25. Galata A, Johnson N, Hogg D. Learning variable-length Markov models of behavior. Comput Vis Image Und. 2001;81:398-413.

26. Oliver NM, Rosario B, Pentland A. A Bayesian computer vision system for modeling human interactions. IEEE Trans Pattern Anal Mach Intell. 2000;22:831-43.

27. Malešević N, Marković D, Kanitz G, Controzzi M, Cipriani C, Antfolk C. Decoding of individual finger movements from surface EMG signals using vector autoregressive hierarchical hidden Markov models (VARHHMM). In: 2017 International Conference on Rehabilitation Robotics (ICORR); 2017 Jul 17-20; London, UK. New York: IEEE; 2017. pp. 1518-23.

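28. Yamato J, Ohya J, Ishii K. Recognizing human action in time-sequential images using hidden Markov model. In: Proceedings 1992 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. New York: IEEE; 1992. pp. 379-85.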

29. Yoon BJ. Hidden Markov models and their applications in biological sequence analysis. Curr Genomics. 2009;10:402-15.

30. Avilés-Arriaga H, Sucar-Succar L, Mendoza-Durán C, Pineda-Cortés L. A comparison of dynamic naive Bayesian classifiers and hidden Markov models for gesture recognition. JART. 2011;9:81-102.

31. Liu J, Ying D, Rymer WZ. EMG burst presence probability: a joint time-frequency representation of muscle activity and its application to onset detection. J Biomech. 2015;48:1193-7.

32. Vaskov AK, Vu PP, North N, et al. Surgically implanted electrodes enable real-time finger and grasp pattern recognition for prosthetic hands. IEEE Trans Robot. 2022;38:2841-57.

33. Kuiken TA, Li G, Lock BA, et al. Targeted muscle reinnervation for real-time myoelectric control of multifunction artificial arms. JAMA. 2009;301:619-28.

34. Li G, Schultz AE, Kuiken TA. Quantifying pattern recognition-based myoelectric control of multifunctional transradial prostheses. IEEE Trans Neural Syst Rehabil Eng. 2010;18:185-92.

35. Cipriani C, Antfolk C, Controzzi M, et al. Online myoelectric control of a dexterous hand prosthesis by transradial amputees. IEEE Trans Neural Syst Rehabil Eng. 2011;19:260-70.

36. Lee C, Vaskov AK, Gonzalez MA, et al. Use of Regenerative Peripheral Nerve Interfaces and intramuscular electrodes to improve prosthetic grasp selection: a case study. J Neural Eng. 2022;19:066010.

37. Vu PP, Vaskov AK, Irwin ZT, et al. A Regenerative Peripheral Nerve Interface allows real-time control of an artificial hand in upper limb amputees. Sci Transl Med. 2020;12:eaay2857.

38. Garcia GP, Nitta K, Lavieri MS, et al. Using Kalman filtering to forecast disease trajectory for patients with normal tension glaucoma. Am J Ophthalmol. 2019;199:111-9.

39. Zhalechian M, Van Oyen MP, Lavieri MS, et al. Augmenting Kalman filter machine learning models with data from OCT to predict future visual field loss: an analysis using data from the African Descent and Glaucoma Evaluation Study and the Diagnostic Innovation in Glaucoma Study. Ophthalmol Sci. 2022;2:100097.

40. Foussier J, Teichmann D, Jia J, Misgeld B, Leonhardt S. An adaptive Kalman filter approach for cardiorespiratory signal extraction and fusion of non-contacting sensors. BMC Med Inform Decis Mak. 2014;14:37.

41. Li Z, O’Doherty JE, Lebedev MA, Nicolelis MA. Adaptive decoding for brain-machine interfaces through Bayesian parameter updates. Neural Comput. 2011;23:3162-204.