
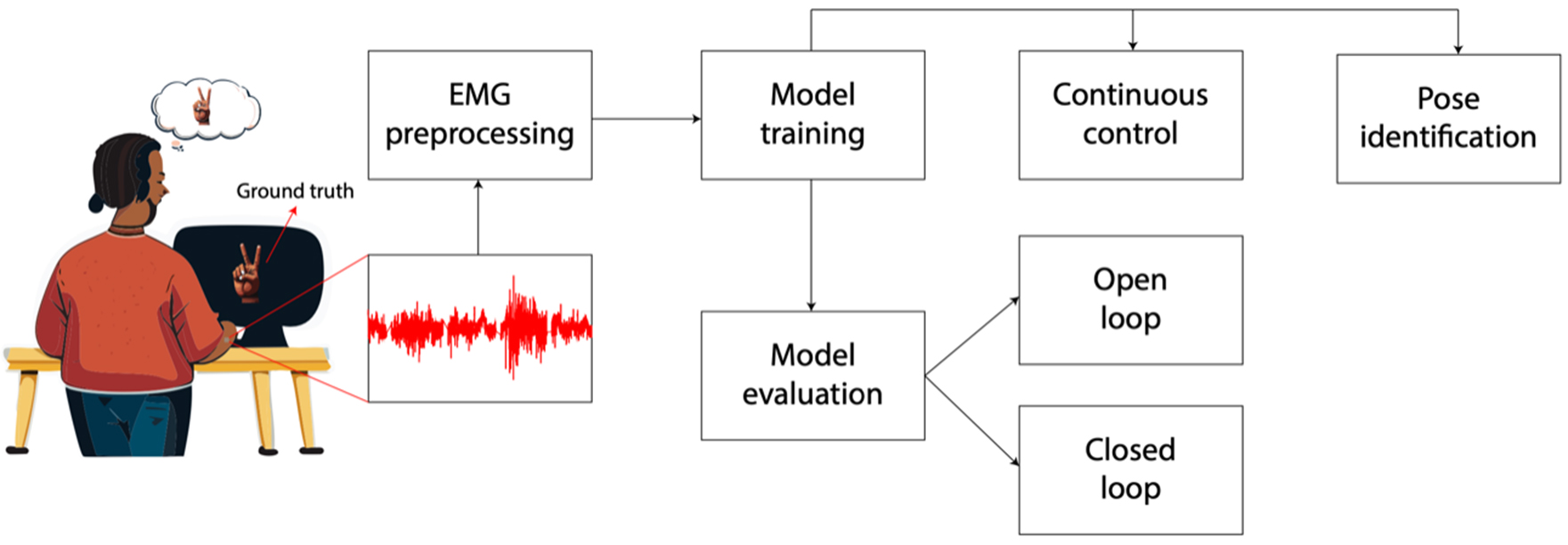

Figure 3. Using RPNIs and machine learning algorithms to control a prosthetic device. The subject performs a hand gesture; the ground truth is the gesture the subject is attempting to mirror, and it serves as the label for training the machine learning algorithm. The subject’s muscle activity is recorded as EMG signals (red waveform). Preprocessed EMG signals, paired with their corresponding ground-truth gestures, are used to train a machine learning model, whose performance is then evaluated to confirm that it accurately predicts gestures from EMG inputs. Once trained and evaluated, the model is used for real-time control of a device (e.g., a robotic hand or prosthesis) in either open-loop control (the system predicts gestures but does not adjust based on feedback from the environment or the subject) or closed-loop control (the system incorporates feedback, such as visual or tactile input, to refine its predictions and improve control accuracy). The model identifies discrete gestures or poses (e.g., peace sign, fist, open hand) from the EMG signals, and these predictions can drive specific actions in a prosthetic device. RPNIs: Regenerative Peripheral Nerve Interfaces; EMG: electromyography.
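
The train, evaluate, and open-loop decode stages described in the caption can be sketched in a few lines. This is only an illustrative sketch, not the method used in the figure: the mean-absolute-value feature, the nearest-centroid classifier, the synthetic four-channel signals, and all function names are assumptions introduced here for demonstration.

```python
import numpy as np

GESTURES = ["fist", "open hand", "peace sign"]  # example labels taken from the caption


def mean_absolute_value(windows):
    """Reduce EMG windows of shape (n_windows, n_samples, n_channels)
    to one mean-absolute-value feature per channel (assumed feature)."""
    return np.mean(np.abs(windows), axis=1)


def synth_windows(rng, label, n, n_samples=200, n_channels=4):
    """Synthetic stand-in for recorded EMG (hypothetical data):
    each gesture is given a larger amplitude on one channel."""
    amplitude = 0.2 + 0.8 * np.eye(len(GESTURES), n_channels)[label]
    return rng.normal(0.0, 1.0, (n, n_samples, n_channels)) * amplitude


def fit_centroids(features, labels):
    """Training step: store the mean feature vector of each gesture class."""
    return np.stack([features[labels == k].mean(axis=0)
                     for k in range(len(GESTURES))])


def predict(centroids, features):
    """Return the index of the nearest class centroid for each feature vector."""
    dists = np.linalg.norm(features[:, None, :] - centroids[None, :, :], axis=2)
    return np.argmin(dists, axis=1)


rng = np.random.default_rng(0)

# Labeled data: EMG windows paired with ground-truth gesture indices.
labels = np.repeat(np.arange(len(GESTURES)), 60)
windows = np.concatenate([synth_windows(rng, k, 60) for k in range(len(GESTURES))])
features = mean_absolute_value(windows)

# Train on two thirds of the windows, evaluate on the held-out third.
order = rng.permutation(len(labels))
train, test = order[:120], order[120:]
centroids = fit_centroids(features[train], labels[train])
accuracy = float(np.mean(predict(centroids, features[test]) == labels[test]))

# Open-loop decoding: classify a new window without using any feedback.
new_window = synth_windows(rng, 0, 1)
decoded = GESTURES[predict(centroids, mean_absolute_value(new_window))[0]]
print(f"held-out accuracy: {accuracy:.2f}, decoded gesture: {decoded}")
```

A closed-loop variant would additionally feed visual or tactile feedback back into the decoder or to the subject, which this sketch deliberately leaves out.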