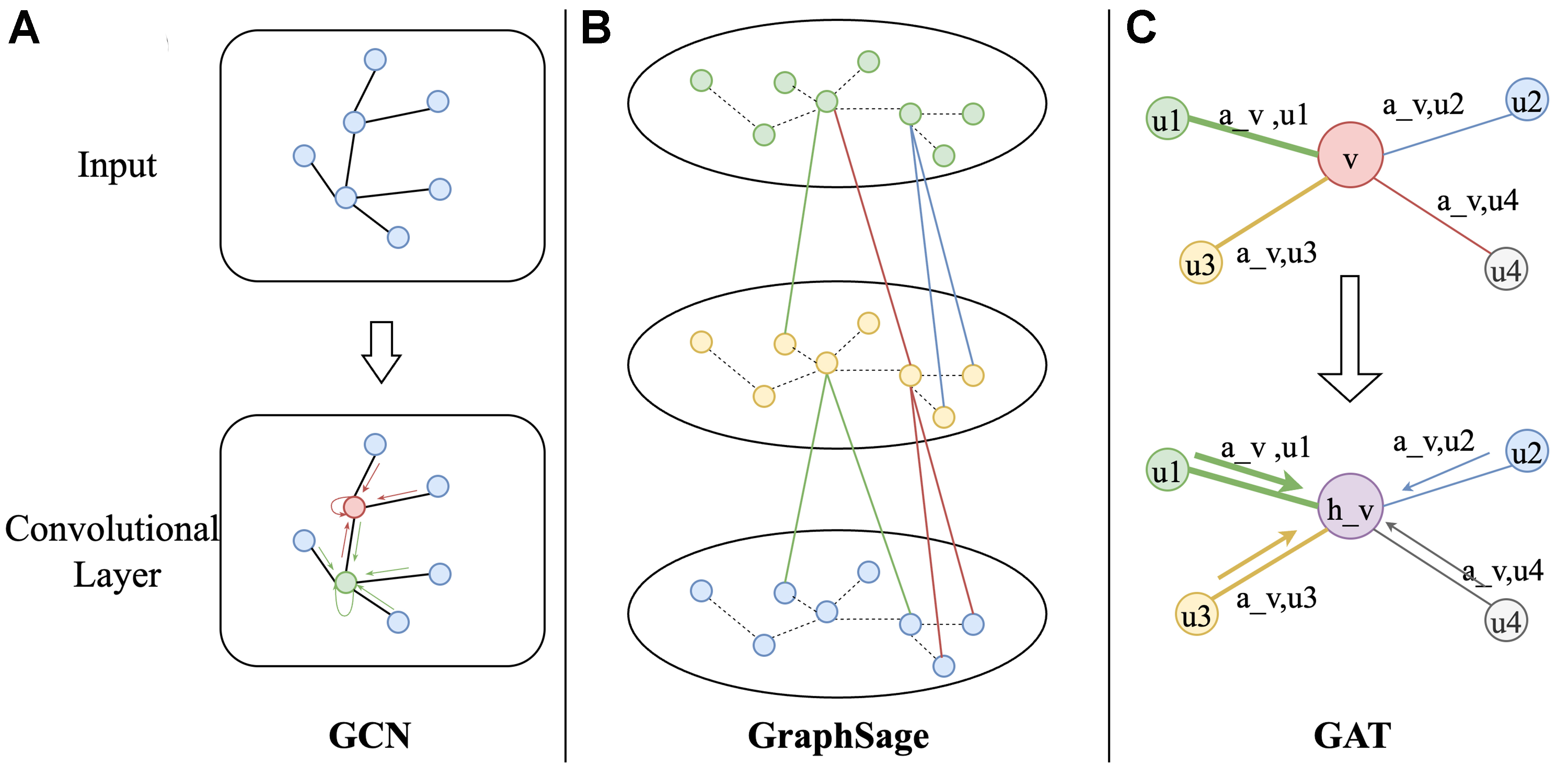

Figure 3. Illustration of common GNN architectures. (A) GCN applies a first-order approximation of spectral graph convolution, aggregating degree-normalized features from each node and its neighbors with shared trainable weights; (B) GraphSAGE samples a fixed-size set of neighbors and aggregates their features (e.g., by mean or max-pooling) to build updated node representations; (C) GAT assigns a learned attention coefficient to each neighbor, allowing the model to weight neighbor contributions adaptively during aggregation. GNN: graph neural network; GCN: graph convolutional network; GAT: graph attention network.
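The three aggregation schemes in the caption can be contrasted in a few lines of NumPy. The following is a minimal sketch, not the reference implementation of any of these models: the toy graph, the feature and weight shapes, the function names (`gcn_layer`, `sage_mean_layer`, `gat_layer`), the single attention head, the ReLU activations, and the LeakyReLU slope of 0.2 are all assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy graph: 4 nodes, undirected, no self-loops.
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
X = rng.normal(size=(4, 3))  # node features (N x F), random for illustration


def gcn_layer(A, X, W):
    """(A) GCN: symmetric degree-normalized aggregation with shared weights."""
    A_hat = A + np.eye(A.shape[0])            # add self-loops
    d_inv_sqrt = A_hat.sum(axis=1) ** -0.5    # D^{-1/2} as a vector
    A_norm = d_inv_sqrt[:, None] * A_hat * d_inv_sqrt[None, :]
    return np.maximum(A_norm @ X @ W, 0.0)    # ReLU


def sage_mean_layer(A, X, W_self, W_neigh, num_samples, rng):
    """(B) GraphSAGE (mean variant): sample a fixed number of neighbors."""
    out = np.zeros((X.shape[0], W_self.shape[1]))
    for v in range(X.shape[0]):
        neigh = np.flatnonzero(A[v])
        # Sample with replacement only when a node has too few neighbors.
        sampled = rng.choice(neigh, size=num_samples,
                             replace=len(neigh) < num_samples)
        h_neigh = X[sampled].mean(axis=0)
        out[v] = X[v] @ W_self + h_neigh @ W_neigh
    return np.maximum(out, 0.0)               # ReLU


def gat_layer(A, X, W, a):
    """(C) GAT (single head): softmax attention over each neighborhood."""
    H = X @ W
    f_out = H.shape[1]
    # a^T [h_i || h_j] splits into a source term plus a destination term.
    e = (H @ a[:f_out])[:, None] + (H @ a[f_out:])[None, :]
    e = np.where(e > 0, e, 0.2 * e)           # LeakyReLU
    mask = (A + np.eye(A.shape[0])) > 0       # attend to neighbors and self
    e = np.where(mask, e, -np.inf)
    e = e - e.max(axis=1, keepdims=True)      # numerical stability
    alpha = np.exp(e)
    alpha = alpha / alpha.sum(axis=1, keepdims=True)
    return alpha @ H, alpha                   # output nonlinearity omitted


W = rng.normal(size=(3, 2))
h_gcn = gcn_layer(A, X, W)
h_sage = sage_mean_layer(A, X, rng.normal(size=(3, 2)),
                         rng.normal(size=(3, 2)), num_samples=2, rng=rng)
h_gat, alpha = gat_layer(A, X, W, rng.normal(size=4))
```

In this sketch the only difference between the three layers is how neighbor contributions are weighted: fixed degree-based coefficients for GCN, uniform weights over a sampled subset for GraphSAGE, and learned, input-dependent coefficients (`alpha`) for GAT.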