AI-powered medical imaging for ventral hernia repair

Abstract

Ventral hernia repair (VHR) is the surgical restoration of abdominal wall integrity to correct hernia defects and prevent recurrence. Artificial intelligence (AI) has emerged as a transformative tool in medical imaging, offering novel solutions to enhance the workflow and outcomes in VHR. This manuscript explores AI-driven applications in imaging for VHR, focusing on preoperative risk stratification, intraoperative augmented reality guidance, and postoperative wound monitoring. AI imaging models have demonstrated efficacy in preoperatively predicting hernia formation, optimizing surgical planning, and predicting complications. Recent advancements, including convolutional neural networks and real-time object detection models, have shown promise in automating wound assessment and streamlining clinical workflow. Still, there are notable challenges in AI imaging, such as dataset bias, high computational demands, and model interpretability. Future work should prioritize dataset diversity, computational efficiency, and explainable AI to ensure equitable, scalable, and clinically reliable AI imaging integration for VHR.

Keywords

INTRODUCTION

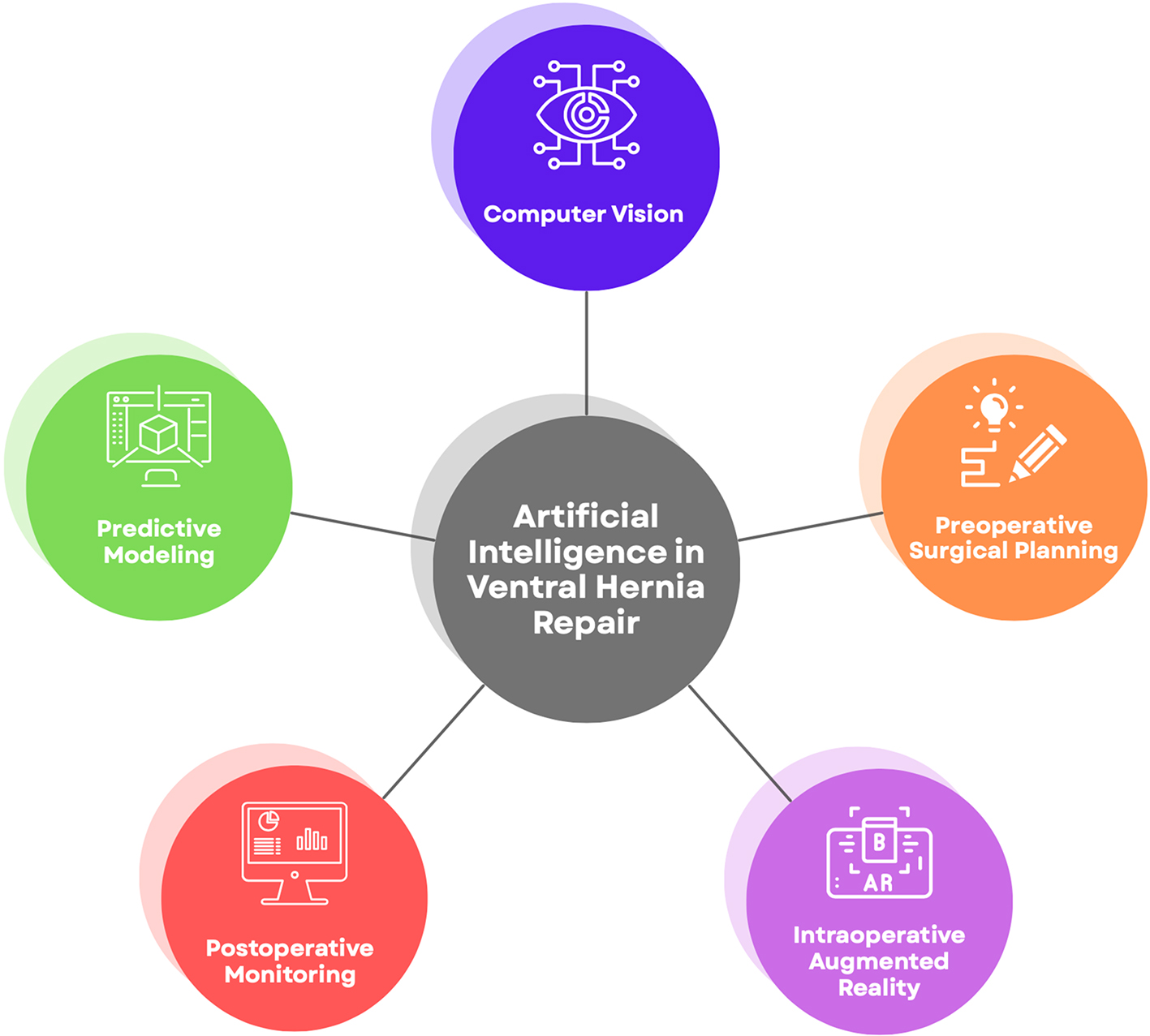

Ventral hernia repair (VHR) is a surgical procedure that restores the integrity of the abdominal wall following hernia formation, with the goal of recovering the structure and function of the native abdomen. These procedures demand precise preoperative planning, accurate intraoperative execution, and effective postoperative monitoring. Recent advancements in artificial intelligence (AI), particularly in image analysis and computer vision (CV), have the potential to revolutionize medical imaging and enhance outcomes in VHR. By leveraging AI algorithms, clinicians can gain deeper insights from medical imaging data, improve risk stratification, and streamline decision-making processes. The purpose of this manuscript is to highlight both the current applications of AI in medical image analysis for VHR and the future potential of this field [Figure 1].

APPLICATIONS

Understanding pathophysiology

Incisional hernias (IH) are a common, morbid, long-term complication after abdominal surgery. They occur in approximately 1%-10% of all abdominal surgeries, and they are increasingly common in the United States and globally[1,2]. Advancements in AI present an opportunity to deepen the understanding of hernia pathophysiology, an area that remains incompletely understood[3].

McAuliffe et al. utilized preoperative computed tomography (CT) imaging to train a deep learning (DL) model to identify linear and volumetric measurements of viscera, adipose, and muscle in the abdominopelvic cavity. Their work showed that widening of the rectus complex, increased visceral volume, and abdominopelvic skeletal muscle atrophy were associated with the eventual development of IH[4]. These findings suggest a common pathophysiologic mechanism for developing ventral hernia, rooted in increased intra-abdominal volume or pressure against a weakened abdominal wall.

Surgeons can use this work to assess preoperative radiological images and evaluate a patient’s risk of developing a hernia. In follow-up work from the same group, Talwar et al. used an extended dataset and employed three machine learning (ML) techniques - ensemble boosting, random forest, and support vector machine - to identify optimal CT-based biomarkers (OBMs) that could predict IH formation[3]. The study found that a parsimonious model of three OBMs achieved a predictive accuracy of 0.83, underscoring the potential of AI to synthesize imaging data and identify patterns linked to hernia formation[3]. These findings lay the groundwork for future studies using preoperative CT scans for risk stratification in VHR.

Preoperative surgical planning for VHR

Advancements in AI-based imaging have demonstrated significant potential in enhancing preoperative planning for VHR.

Love et al. introduced a method to predict the need for additional myofascial release (AMR) during open Rives-Stoppa hernia repair based on the rectus width to hernia width ratio (RDR) measured from CT scans[5]. Their approach relied on manual measurements of key anatomical features and proved to be a practical tool: the likelihood of requiring AMR decreased linearly as RDR increased[5]. Though the ratio is useful for planning, 10% of patients with the highest RDR still required AMR. Additionally, the model did not account for key biomechanical features, such as the elasticity of the abdominal wall, underscoring the need for tools that leverage AI and CV to provide more advanced insights into surgical planning.
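The ratio itself is simple arithmetic. The following is a minimal sketch, assuming both widths are measured in centimeters on the same axial CT slice; the function name and the example measurements are hypothetical, and no decision threshold from Love et al. is implied.

```python
def rectus_to_hernia_ratio(rectus_width_cm: float, hernia_width_cm: float) -> float:
    """Return the rectus width to hernia width ratio (RDR).

    How the rectus width is obtained (for example, one side or both sides
    combined) is left to the measurement protocol and is an assumption here.
    """
    if rectus_width_cm <= 0 or hernia_width_cm <= 0:
        raise ValueError("widths must be positive")
    return rectus_width_cm / hernia_width_cm


# Hypothetical measurements, for illustration only.
narrow_defect = rectus_to_hernia_ratio(rectus_width_cm=16.0, hernia_width_cm=8.0)
wide_defect = rectus_to_hernia_ratio(rectus_width_cm=16.0, hernia_width_cm=16.0)

# A wider defect relative to the rectus muscles gives a lower RDR.
print(narrow_defect, wide_defect)
```

In this toy comparison, the wider defect yields the lower ratio, which is the direction in which the source reports a greater likelihood of requiring AMR.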

Building on this foundation, Elhage et al. applied DL models to enhance preoperative planning for patients undergoing abdominal wall reconstruction (AWR), a procedure to repair complex abdominal wall hernias. They developed three convolutional neural network (CNN) models to predict the need for component separation, surgical site infections (SSIs), and pulmonary complications[6,7]. CNNs consist of multiple layers that process images; the initial layers detect simple patterns, such as contrast differences, while deeper layers identify more complex structures, like shapes[8]. This hierarchical feature extraction enables CNNs to recognize anatomical structures, making them well-suited for image analysis.
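To make the idea of an early layer responding to contrast differences concrete, here is a minimal sketch of a single convolution in plain Python. The toy image and the hand-written edge kernel are assumptions for illustration; a trained CNN learns its kernels from data, and this is not the architecture used by Elhage et al.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the response map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Toy 4x6 "image": dark region (0) on the left, bright region (1) on the right.
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

# Hand-written vertical-edge kernel (hypothetical; real kernels are learned).
edge_kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

response = conv2d_valid(image, edge_kernel)
for row in response:
    print(row)
```

The response is zero over the uniform regions and nonzero only where the window straddles the dark-to-bright boundary. Deeper layers apply further convolutions to maps like this one, which is how simple contrast detectors are composed into detectors for more complex shapes.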

Using a dataset of 369 patients and 9,303 preoperative CT images, they trained the models with an 80/20 split, classifying each image as “yes” or “no” for each prediction task[6]. The models then generated a probability score (0-1), reflecting the likelihood of each outcome. Elhage et al. found that the CNN models outperformed surgeons in predicting the need for component separation and accurately identified SSIs[6]. However, the models struggled with detecting pulmonary complications, likely due to the small sample size of rare outcomes. To address these limitations, the authors proposed architectural refinements to improve model efficacy.

In a follow-up study, the same group used a generative adversarial network anomaly (GANomaly) model to improve predictions for rare complications following AWR. It was trained on an expanded dataset of 510 patients with 10,004 CT images. The GANomaly model generated synthetic CT images to better represent low-frequency events such as mesh infection and pulmonary failure[9]. Compared to the prior model, the GANomaly model improved sensitivity, increasing from 25% to 68% for mesh infection and 27% to 73% for pulmonary failure[9]. The model’s predictive ability improved from 0.61 to 0.73 for mesh infection and 0.59 to 0.70 for pulmonary failure[9]. However, precision declined from 93% to 78% for mesh infection and 92% to 67% for pulmonary failure[9]. This highlights a trade-off: while sensitivity improved for rare complications, the risk of overdiagnosis increased, a useful lesson for future AI development.
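The trade-off between sensitivity and precision can be illustrated with a small worked example. The counts below are hypothetical and are not taken from Ayuso et al.; they only show how a model that flags more cases can catch more true complications while also producing more false alarms.

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    """Fraction of actual complications that the model flags."""
    return true_pos / (true_pos + false_neg)


def precision(true_pos: int, false_pos: int) -> float:
    """Fraction of flagged cases that are actual complications."""
    return true_pos / (true_pos + false_pos)


# Hypothetical cohort with 20 true complications.
# A conservative model flags few cases; a liberal model flags many.
conservative = {"tp": 5, "fn": 15, "fp": 1}
liberal = {"tp": 14, "fn": 6, "fp": 4}

for name, m in (("conservative", conservative), ("liberal", liberal)):
    sens = sensitivity(m["tp"], m["fn"])
    prec = precision(m["tp"], m["fp"])
    print(f"{name}: sensitivity={sens:.2f}, precision={prec:.2f}")
```

In this sketch the liberal model finds far more of the true complications, but a larger share of its alerts are false positives, mirroring the pattern reported above.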

To further refine predictive modeling, Wilson et al. compared three DL models - one trained on structured clinical data alone, one trained on CT imaging, and one combining both datasets to predict complications[10]. The clinical-only DL model, incorporating variables such as age, BMI, diabetes history, and tobacco use, outperformed both the image-only and mixed models[10]. However, the researchers noted that the small sample size and unbalanced dataset likely contributed to the underperformance of the image-based models in predicting hernia recurrence[10]. Future work should focus on identifying image-based features that enhance predictive accuracy and ensuring the use of larger, more diverse datasets for model training. Despite these challenges, DL applications in VHR should be further explored to augment preoperative planning. Table 1 summarizes the studies reviewed in this section, comparing model types, tasks, training data, and key findings.

Table 1. Models for preoperative surgical planning

| Study | Model type | Task | Training data | Key findings |
| --- | --- | --- | --- | --- |
| Love et al. 2021[5] | Manual computation | Predict need for AMR during Rives-Stoppa hernia repair | 342 CT scans (1 axial slice per patient); all images used for statistical analysis | Rectus width to hernia width ratio inversely correlated with need for AMR |
| Elhage et al. 2021[6] | Image-based deep learning | Predict surgical complexity and outcomes in AWR | ~7,400 CT images | Convolutional neural network outperformed surgeons in predicting surgical complexity; struggled with predicting rare complications |
| Ayuso et al. 2023[9] | GANomaly deep learning | Predict surgical complexity and outcomes in AWR | ~8,000 CT images | Improved sensitivity (25% to 68% mesh infection, 27% to 73% pulmonary failure); precision declined |
| Wilson et al. 2024[10] | Image-based deep learning | Predict hernia recurrence; image-only, clinical data-only, and mixed models | ~152 composite images (each created by averaging multiple axial slices containing the hernia) | Clinical-only model outperformed others; image models underperformed due to small, unbalanced dataset |

Intraoperative decision support

Augmented reality (AR) systems that integrate imaging and AI offer an avenue for improving intraoperative decision making in VHR. While the use of AR in VHR is nascent, real-time AI-based imaging has shown success in related surgical applications, highlighting its potential for future integration.

Bamba et al. developed a CNN-based CV system for real-time detection of the gastrointestinal (GI) tract, blood, vessels, gauze, and clips[11]. The system was trained on annotated surgical videos from various procedures, including colectomy, hernia repair, and sigmoid resection, with experts manually labeling each visible tool and anatomical object[11]. A total of 1,070 images were used for training. The model, developed using IBM Visual Insights, demonstrated a mean sensitivity and positive predictive value (PPV) of 92% and 80%, respectively[11]. When identifying blood vessels - anatomy of particular relevance to VHR - the model achieved a sensitivity and PPV of 79.3% and 82.1%, respectively[11]. However, the authors noted that the CNN model frequently misclassified reddish fat as blood vessels, suggesting the need for further refinement.

The study represents a significant step toward real-time AI-based imaging in intraoperative settings. A key limitation of this system is that it was trained on endoscopic images, which differ from the open surgical approach used in VHR. While the model performs well in confined endoscopic settings, its efficacy in open surgeries, where lighting conditions and perspectives are variable, remains unverified. To address these limitations, hybrid imaging approaches that integrate infrared or depth-sensing modalities may enhance anatomic differentiation. By building upon current advancements, AI-powered imaging can significantly enhance intraoperative decision making and improve patient outcomes.

Postoperative monitoring after VHR

AI-based imaging applications have the potential to enhance postoperative monitoring following VHR by detecting wound complications[12-15]. Rochon et al. developed an AI-powered tool to identify surgical wounds requiring priority review[15]. The tool used “You Only Look Once” (YOLO), an object detection algorithm known for its real-time processing capabilities[15]. While YOLO requires significant computational power, it offers faster processing than traditional CNN models. It was trained on 37,974 images and tested on 3,634 images[15]. The model categorized images based on the presence of four factors: discoloration, unexpected fluids or tissues, retained sutures or clips, and incisional separation.

The model demonstrated an overall sensitivity of 89% and 100% intra-rater reliability in identifying wounds requiring urgent review[15]. However, accuracy fell for detecting incision separation and skin discoloration in darker skin tones, likely due to dataset imbalance (71% lighter skin, 29% darker skin tones)[15]. YOLO’s high computational demands may also limit adoption. Despite these limitations, AI-driven wound assessment presents a promising avenue for postoperative VHR monitoring.
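An aggregate sensitivity figure can hide this kind of subgroup gap, so it helps to report the metric separately for each group. The sketch below assumes each test image carries a skin-tone label, a ground-truth flag, and the model's output; the record layout and all counts are hypothetical and are not data from Rochon et al.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Compute sensitivity per subgroup.

    `records` is an iterable of (group, needs_review, flagged) tuples, where
    `needs_review` is the ground truth and `flagged` is the model output.
    """
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for group, needs_review, flagged in records:
        if not needs_review:
            continue  # sensitivity only considers truly positive cases
        if flagged:
            true_pos[group] += 1
        else:
            false_neg[group] += 1
    groups = set(true_pos) | set(false_neg)
    return {g: true_pos[g] / (true_pos[g] + false_neg[g]) for g in groups}


# Hypothetical test set: 10 positive wounds on lighter skin, 4 on darker skin.
records = (
    [("lighter", True, True)] * 9 + [("lighter", True, False)] * 1
    + [("darker", True, True)] * 3 + [("darker", True, False)] * 1
    + [("lighter", False, False)] * 5 + [("darker", False, False)] * 2
)

per_group = sensitivity_by_group(records)
print(per_group)
```

In this toy data the pooled sensitivity is 12 of 14, which looks acceptable, while the smaller subgroup performs noticeably worse. Stratified reporting of this kind is one way to surface the effect of dataset imbalance.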

Another study explored the use of mobile thermal imaging and deep learning to detect SSIs following cesarean section surgery in Rwanda, aiming to develop an accessible postoperative monitoring tool for resource-limited settings[16]. Two CNN models, a naïve CNN (baseline) and ResNet50 (pre-trained for feature extraction), were trained on 500 thermal wound images, which were manually labeled by a physician[16]. These images were captured using a mobile thermal imaging device, which records heat patterns emitted from the wound site. The naïve CNN achieved an accuracy of 0.86, while ResNet50 demonstrated an accuracy of 0.94[16]. The study was limited by the small number of SSI-positive images, which may have affected the generalizability of the model. Expanding the dataset could improve model performance and advance the development of a cost-effective postoperative monitoring modality.

CHALLENGES AND LIMITATIONS

AI-based imaging shows promise in VHR but faces barriers to widespread clinical adoption[17,18]. A Lancet editorial highlights the need for high-quality datasets that minimize bias, so that innovations do not exacerbate healthcare disparities in resource-limited settings[19]. Populations in these settings may have unique physical characteristics that AI technologies assess inaccurately.

In addition, as AI models grow in complexity, deciphering the “black box,” a term for a model’s opaque decision-making process, has become increasingly difficult[19-21]. Physicians who rely upon AI-based tools may have difficulty justifying decisions, improving models, and gaining new insights[21]. Numerous explainable AI models have been proposed in medicine; however, current approaches for image-analysis models produce explanations that are too complex for users to understand[22,23].

Furthermore, computational costs restrict adoption[24]. Despite falling digital technology costs, the World Health Organization notes that access remains inequitable[25]. Thus, the lack of digital infrastructure may limit the use of AI in resource-poor settings[25]. Deep learning systems rely on high-performance GPUs, a dependency that is becoming increasingly costly as the demand for computing power continues to rise[26].

FUTURE WORK

Despite the promising application of AI in VHR, challenges must be addressed to ensure equitable and clinically reliable AI implementation.

Improving dataset diversity is critical to mitigating bias and ensuring AI models function well across diverse patient populations. Additionally, addressing the computational burden and accessibility challenges of AI deployment in VHR is necessary for widespread adoption. Future research should explore lightweight AI models optimized for efficiency, leveraging techniques such as quantization to reduce computational demands[27]. Additionally, cloud-based AI solutions could expand access to AI-powered medical imaging in resource-poor settings[28].
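As an illustration of how quantization reduces computational demands, the following is a minimal sketch of symmetric 8-bit quantization in plain Python. The weight values are hypothetical, and production frameworks use more elaborate schemes (for example, per-channel scales and calibration), so this is only a simplified picture of the idea.

```python
def quantize_int8(weights):
    """Map floating-point weights to integer codes in [-127, 127] with one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate floating-point weights from the integer codes."""
    return [c * scale for c in codes]


# Hypothetical weights from one layer of a model.
weights = [0.5, -1.27, 0.02, 1.0]

codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(codes, scale, max_error)
```

Each weight is stored in one byte instead of the four bytes of a 32-bit float, at the cost of a rounding error bounded by half the scale. Whether that error is acceptable for a given clinical imaging task would need to be validated empirically.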

CONCLUSION

AI-powered medical imaging has shown potential for enhancing preoperative planning, intraoperative decision making, and postoperative monitoring for VHR. Through advanced imaging techniques, AI tools can predict complications, optimize surgical planning, and support long-term patient care. The studies in this manuscript highlight the transformative impact of AI on VHR by addressing clinical challenges and personalizing patient management.

However, several limitations must be addressed to realize the benefit of AI in VHR. Across applications, models have struggled with misclassification and decreased precision in rare outcomes. Additionally, the risk of overdiagnosis, particularly in low-prevalence complications, underscores the importance of ongoing validation in real-world settings. Future work should focus on developing large, diverse datasets, improving model interpretability, and developing scalable solutions.

While AI-powered medical imaging in VHR is in its early stages, its progress is promising. With efforts to refine AI systems, the future of VHR holds the potential to improve surgical outcomes, tailor care to individual needs, and bridge technology with compassionate care. These advancements promise to transform both VHR and the broader field of surgical innovation.

DECLARATIONS

Authors’ contributions

Made substantial contributions to the conception and design of the review: Talwar A, Kelshiker AI, Fischer JP

Availability of data and materials

Not applicable.

Financial support and sponsorship

None.

Conflicts of interest

All authors declared that there are no conflicts of interest.

Ethical approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Copyright

© The Author(s) 2025.

REFERENCES

1. Huerta S, Varshney A, Patel PM, Mayo HG, Livingston EH. Biological mesh implants for abdominal hernia repair: US Food and Drug Administration approval process and systematic review of its efficacy. JAMA Surg. 2016;151:374-81.

2. Gillies M, Anthony L, Al-Roubaie A, Rockliff A, Phong J. Trends in incisional and ventral hernia repair: a population analysis from 2001 to 2021. Cureus. 2023;15:e35744.

3. Talwar AA, Desai AA, McAuliffe PB, et al. Optimal computed tomography-based biomarkers for prediction of incisional hernia formation. Hernia. 2024;28:17-24.

4. McAuliffe PB, Desai AA, Talwar AA, et al. Preoperative computed tomography morphological features indicative of incisional hernia formation after abdominal surgery. Ann Surg. 2022;276:616-25.

5. Love MW, Warren JA, Davis S, et al. Computed tomography imaging in ventral hernia repair: can we predict the need for myofascial release? Hernia. 2021;25:471-7.

6. Elhage SA, Deerenberg EB, Ayuso SA, et al. Development and validation of image-based deep learning models to predict surgical complexity and complications in abdominal wall reconstruction. JAMA Surg. 2021;156:933-40.

7. Taye MM. Theoretical understanding of convolutional neural network: concepts, architectures, applications, future directions. Computation. 2023;11:52.

8. O’Shea K, Nash R. An introduction to convolutional neural networks. arXiv. 2015;arXiv:1511.08458. Available from http://arxiv.org/abs/1511.08458. [Last accessed on 4 Aug 2025].

9. Ayuso SA, Elhage SA, Zhang Y, et al. Predicting rare outcomes in abdominal wall reconstruction using image-based deep learning models. Surgery. 2023;173:748-55.

10. Wilson HH, Ma C, Ku D, et al. Deep learning model utilizing clinical data alone outperforms image-based model for hernia recurrence following abdominal wall reconstruction with long-term follow up. Surg Endosc. 2024;38:3984-91.

11. Bamba Y, Ogawa S, Itabashi M, et al. Object and anatomical feature recognition in surgical video images based on a convolutional neural network. Int J Comput Assist Radiol Surg. 2021;16:2045-54.

12. Tabja Bortesi JP, Ranisau J, Di S, et al. Machine learning approaches for the image-based identification of surgical wound infections: scoping review. J Med Internet Res. 2024;26:e52880.

13. Tanner J, Rochon M, Harris R, et al. Digital wound monitoring with artificial intelligence to prioritise surgical wounds in cardiac surgery patients for priority or standard review: protocol for a randomised feasibility trial (WISDOM). BMJ Open. 2024;14:e086486.

14. McLean KA, Sgrò A, Brown LR, et al. Evaluation of remote digital postoperative wound monitoring in routine surgical practice. NPJ Digit Med. 2023;6:85.

15. Rochon M, Tanner J, Jurkiewicz J, et al. Wound imaging software and digital platform to assist review of surgical wounds using patient smartphones: the development and evaluation of artificial intelligence (WISDOM AI study). PLoS One. 2024;19:e0315384.

16. Fletcher RR, Schneider G, Bikorimana L, et al. The use of mobile thermal imaging and deep learning for prediction of surgical site infection. In: 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC); 2021 Nov 1-5; Mexico. New York: IEEE; 2021. pp. 5059-62.

17. Mayol J. Transforming abdominal wall surgery with generative artificial intelligence. J Abdom Wall Surg. 2023;2:12419.

18. Talwar A, Shen C, Shin JH. Natural language processing in plastic surgery patient consultations. Art Int Surg. 2025;5:46-52.

21. Pierce RL, Van Biesen W, Van Cauwenberge D, Decruyenaere J, Sterckx S. Explainability in medicine in an era of AI-based clinical decision support systems. Front Genet. 2022;13:903600.

22. Ghassemi M, Oakden-Rayner L, Beam AL. The false hope of current approaches to explainable artificial intelligence in health care. Lancet Digit Health. 2021;3:e745-50.

23. Reddy S. Explainability and artificial intelligence in medicine. Lancet Digit Health. 2022;4:e214-5.

24. Gaetani M, Mazwi M, Balaci H, Greer R, Maratta C. Artificial intelligence in medicine and the pursuit of environmentally responsible science. Lancet Digit Health. 2024;6:e438-40.

25. World Health Organization. Ethics and governance of artificial intelligence for health. Geneva: World Health Organization; 2021. pp. xi, 34. Available from https://www.who.int/publications/i/item/9789240029200. [Last accessed on 4 Aug 2025].

26. Thompson N, Greenewald K, Lee K, Manso GF. The computational limits of deep learning. Proceedings of the Ninth Computing within Limits Conference; 2023 June 13-15; Virtual. New York: ACM; 2023.

27. Abid A, Sinha P, Harpale A, Gichoya J, Purkayastha S. Optimizing medical image classification models for edge devices. In: Matsui K, Omatu S, Yigitcanlar T, González SR, Editors. Distributed Computing and Artificial Intelligence, Volume 1: 18th International Conference. Proceedings of the 18th International Conference on Distributed Computing and Artificial Intelligence; 2021 Oct 6-8; Salamanca, Spain. Cham: Springer; 2022. pp. 77-87.
