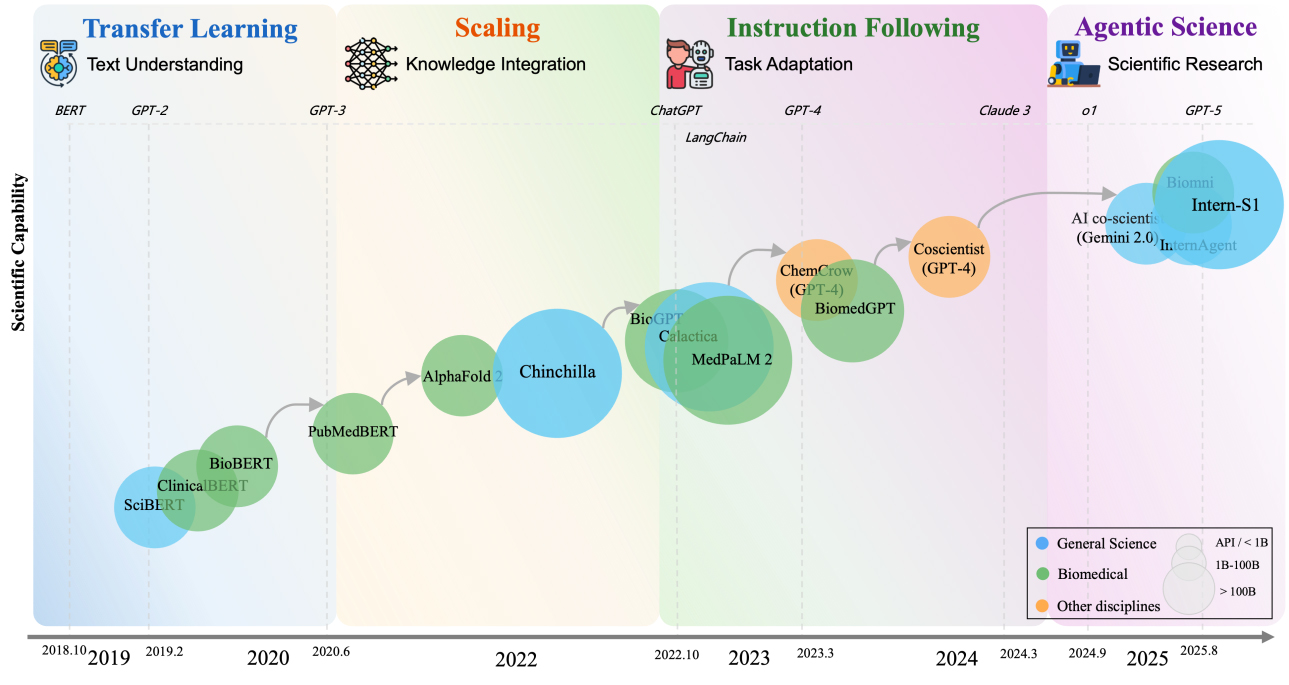

Figure 3. The evolution of Sci-LLMs from 2018 to 2025 reveals four paradigm shifts. This figure is quoted from Hu et al.[24]. Sci-LLM: Scientific Large Language Model; SciBERT: a pretrained language model for scientific text based on BERT (Bidirectional Encoder Representations from Transformers); BioBERT: a biomedical-domain language model pretrained on biomedical corpora; PubMedBERT: a biomedical language model whose training corpus covers a broader biomedical vocabulary; AlphaFold: an AI system developed by Google DeepMind that predicts the 3D structures of proteins with high accuracy; Chinchilla: a family of large language models developed by Google DeepMind; MedPaLM-2: Medical Pathways Language Model 2, an LLM fine-tuned for the medical domain; BiomedGPT: Biomedical Generative Pre-trained Transformer, an open-source, lightweight vision–language foundation model designed as a generalist capable of performing various biomedical tasks; Intern-S1: a scientific multimodal model released by the Shanghai AI Laboratory that integrates scientific data such as protein sequences, genomes, chemical molecular formulas, and electroencephalography (EEG) signals.