Volume 3, Issue 1 (2023) – 5 articles

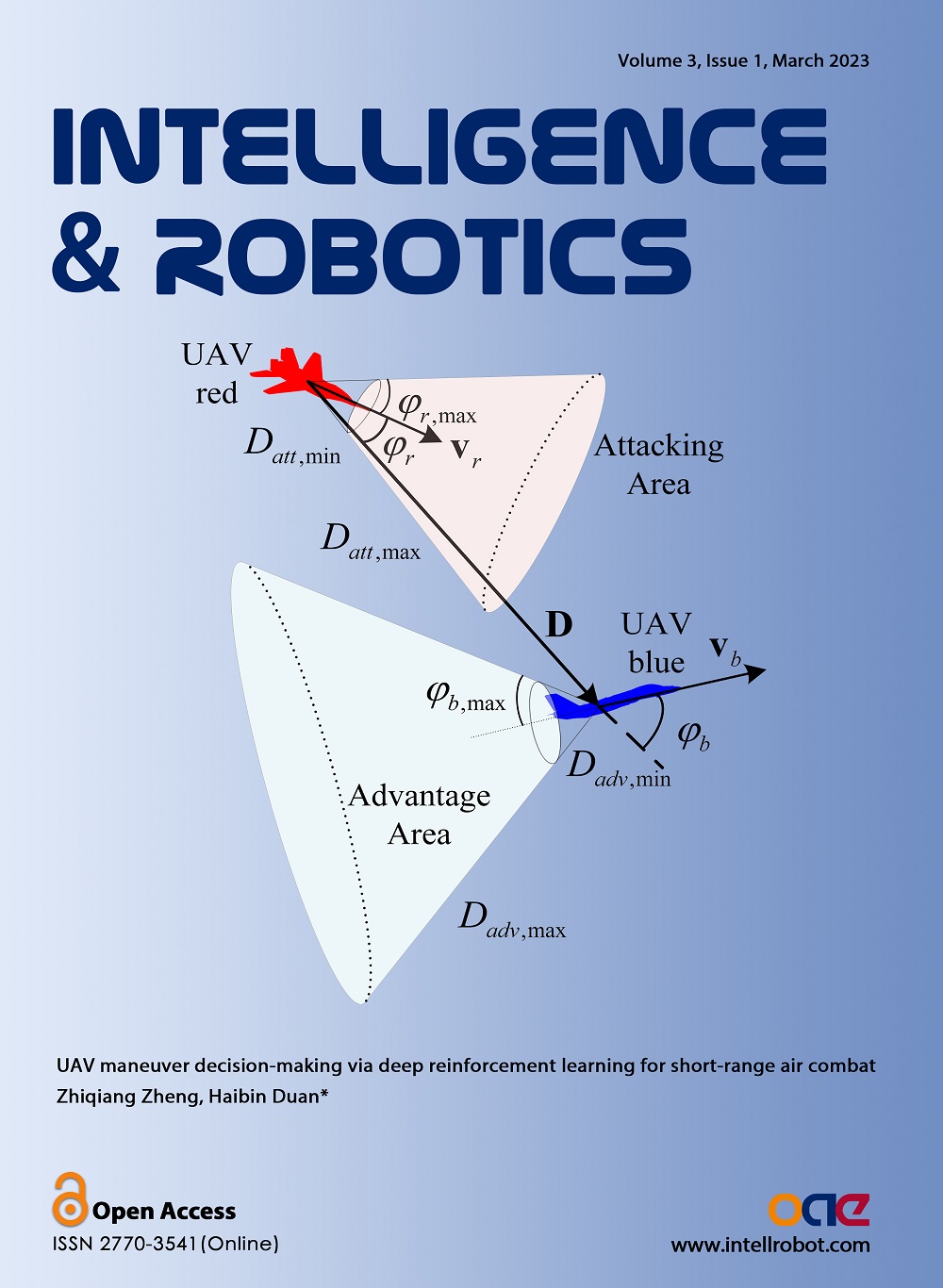

Cover Picture: The unmanned aerial vehicle (UAV) has been applied in unmanned air combat because of its flexibility and practicality. The short-range air combat situation changes rapidly, so the UAV has to make autonomous maneuver decisions as quickly as possible. In this paper, a short-range air combat maneuver decision method based on deep reinforcement learning is proposed. Firstly, the combat environment, including the UAV motion model and the position and velocity relationships, is described. On this basis, the combat process is established. Secondly, several improvements to proximal policy optimization (PPO) are proposed to enhance the maneuver decision-making ability. A gated recurrent unit (GRU) helps PPO make decisions from data over consecutive timesteps. The actor network's input is the UAV's observation, whereas the input of the critic network, termed the state, includes the health points ("blood values"), which cannot be observed directly. In addition, an action space with 15 basic actions and a well-designed reward function are proposed to connect the air combat environment with PPO. In particular, the reward function is divided into a dense reward, an event reward and an end-game reward to keep training feasible. The training process is composed of three phases to shorten the training time. Finally, the designed maneuver decision method is verified through an ablation study and confrontation tests. The results show that a UAV using the proposed maneuver decision method can obtain an effective action policy and make more flexible decisions in air combat.
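
As a rough illustration of the three-part reward described in the abstract, the following Python sketch sums a dense term, an event term and an end-game term. It is only a sketch under stated assumptions: the `StepInfo` fields, the `total_reward` function, and all weights are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class StepInfo:
    """Hypothetical per-step summary of the combat situation."""
    advantage: float   # assumed situational score in [-1, 1]
    hit_enemy: bool    # assumed event: own UAV damaged the opponent
    was_hit: bool      # assumed event: own UAV took damage
    done: bool         # episode finished on this step
    won: bool          # only meaningful when done is True


def total_reward(info: StepInfo,
                 w_dense: float = 0.1,
                 r_event: float = 1.0,
                 r_end: float = 10.0) -> float:
    """Sum of dense, event and end-game rewards (illustrative weights)."""
    # Dense reward: small shaping signal available at every timestep.
    dense = w_dense * info.advantage
    # Event reward: sparse signal tied to discrete combat events.
    event = r_event * (int(info.hit_enemy) - int(info.was_hit))
    # End-game reward: paid once, when the episode terminates.
    end_game = 0.0
    if info.done:
        end_game = r_end if info.won else -r_end
    return dense + event + end_game
```

Keeping the dense term small relative to the event and end-game terms is one common way to stop shaping signals from dominating the outcome; whether the paper uses a similar weighting is not stated in the abstract.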
